Ever since Facebook and Twitter gained prominence, there has been debate over whether Google pays attention to social signals as part of its algorithm. Matt Cutts denied this in 2014.

Google+ used to affect page visibility in the algorithm through rel=“author”. Although it no longer has any impact when you are signed out of Google, it still affects the SERPs when you’re signed in. Twitter has 302 million users, Google+ has over 540 million and Facebook has over 1 billion, yet none of this data is used directly in Google’s algorithm.

A recent blog post by Bill Slawski examined a Google patent covering recommendations for “photogenic locations to visit”. It touched on a “global interestingness score” which might be “determined for each of the plurality of photographs included in a cluster based on one or more signals indicative of online activity associated with such photograph.”

Bill translated this as meaning that if a photo was shared from one social profile and then reshared across others (Twitter, Facebook, Instagram etc.), its interestingness score would increase. If the score got high enough, the location could be put forward via Google Now or even Google Maps as a recommended place for photography. Essentially, something that is shared on social and amplified across other profiles and networks could be considered to have a ‘global interestingness score’.
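To make Bill’s reading concrete, here is a minimal sketch of how such a score might be computed. The patent excerpt does not give a formula, so everything here is an assumption for illustration: the `ShareActivity` type, the rule of multiplying total shares by the number of networks involved, and the `RECOMMEND_THRESHOLD` value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ShareActivity:
    network: str  # e.g. "twitter", "facebook", "instagram"
    shares: int   # times the photo was shared or reshared on that network


# Hypothetical cut-off; the patent excerpt does not state one.
RECOMMEND_THRESHOLD = 500


def interestingness_score(activity):
    """Hypothetical score: total shares multiplied by the number of
    distinct networks with at least one share, so cross-network
    amplification counts for more than volume on a single network."""
    active = [a for a in activity if a.shares > 0]
    networks = {a.network for a in active}
    return sum(a.shares for a in active) * len(networks)


def is_recommended(activity, threshold=RECOMMEND_THRESHOLD):
    """True when the score is high enough to surface the location."""
    return interestingness_score(activity) >= threshold


amplified = [
    ShareActivity("twitter", 120),
    ShareActivity("facebook", 80),
    ShareActivity("instagram", 40),
]
single_network = [ShareActivity("twitter", 240)]

print(interestingness_score(amplified))       # 720
print(interestingness_score(single_network))  # 240
print(is_recommended(amplified), is_recommended(single_network))  # True False
```

Both photos have 240 shares in total, but only the one amplified across three networks clears the assumed threshold, which is the behaviour Bill describes.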

Google and Twitter recently joined forces again via Twitter’s Firehose, and I wonder if Google will use this to further enhance its algorithm. For example, why can’t tweets linking to content be a signal in the same way trusted links are?

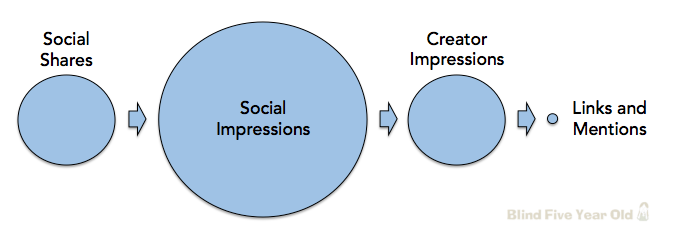

AJ Kohn perfectly described how the Social Echo model works under Google’s current algorithm. It shows how a genuinely high-quality piece of work can generate very few backlinks from social exposure. Sure, the content could get thousands of referral visits, spread across numerous social sources as the piece is amplified. But the big thing is that very little content actually gets linked to by users who find it socially.

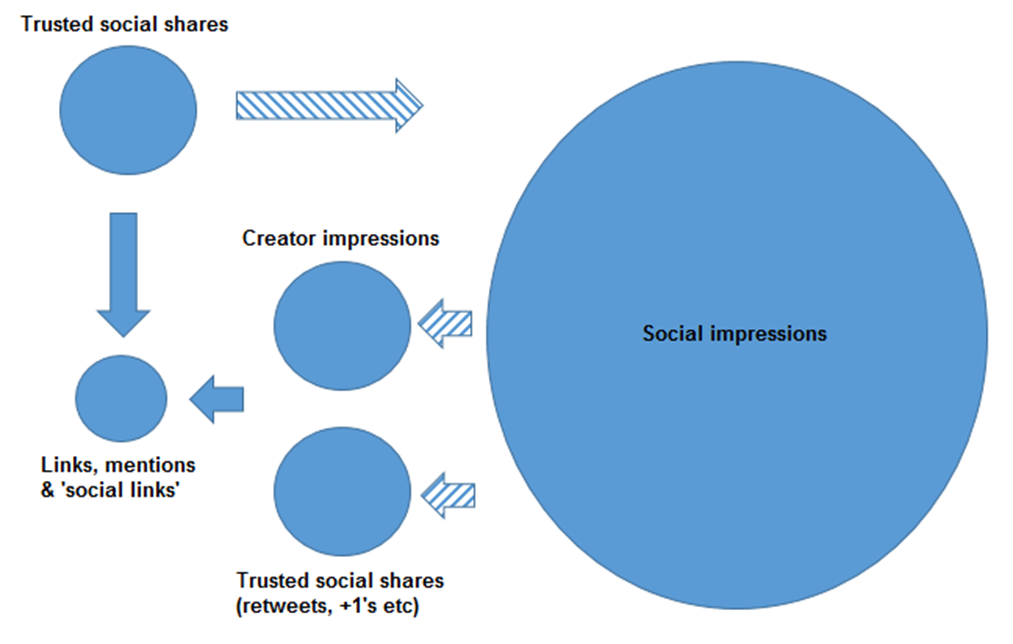

We know that links from trusted websites weigh heavily in the algorithm, but tweets only have an indirect impact on Google giving a quality piece of content any uplift. What if Google followed links from certain trusted social sources? Using Twitter as an example, a trusted social source could be a profile that is either verified or has numerous trusted backlinks.

Currently, a piece of content may get tweeted by a trusted profile, retweeted by another ten trusted accounts and reach hundreds of thousands of other social users (who fall below the required trust threshold), but once that content has been digested, there is little likelihood of it ever being linked to. At the moment, Google only uses the data from this indirect causation to help rank that content!

Take a look at AJ Kohn’s model below, which roughly shows what currently happens.

If Google were to start following links from trusted social profiles then the model would have to change slightly. It would have to incorporate ‘social links’ and retweets from trusted sources. This would increase the links that shared quality content has in Google’s algorithm, further distancing it from its inferior counterparts, helping Google to create an even better picture of what content should rank where in the SERPs.

If these changes did happen, the new model could look like this.

The above model would ensure that quality content gets its true position in the SERPs because it’d get link and trusted social equity.
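As a rough illustration of the idea, the sketch below counts tweets and retweets from trusted profiles as ‘social links’ alongside ordinary backlinks. It is not how Google works today; the `Profile` type, the 0 to 1 `trust` score and the `TRUST_THRESHOLD` value are hypothetical, and the trust rule simply follows the suggestion above that a trusted profile is either verified or has enough trusted backlinks.

```python
from dataclasses import dataclass

# Hypothetical cut-off for treating an unverified profile as trusted.
TRUST_THRESHOLD = 0.7


@dataclass
class Profile:
    handle: str
    verified: bool
    trust: float  # hypothetical 0..1 score, e.g. derived from trusted backlinks


def is_trusted(profile):
    """A profile is trusted if it is verified or meets the trust threshold."""
    return profile.verified or profile.trust >= TRUST_THRESHOLD


def link_equity(backlinks, sharers):
    """Combine website backlinks with 'social links' from trusted sharers.

    backlinks: number of links from trusted websites
    sharers:   profiles that tweeted or retweeted the content
    """
    social_links = sum(1 for p in sharers if is_trusted(p))
    return {
        "backlinks": backlinks,
        "social_links": social_links,
        "total": backlinks + social_links,
    }


# One trusted original tweet, ten trusted retweets, and some low-trust reach.
sharers = [Profile("original", verified=True, trust=0.9)]
sharers += [Profile(f"trusted_{i}", verified=False, trust=0.8) for i in range(10)]
sharers += [Profile(f"casual_{i}", verified=False, trust=0.1) for i in range(50)]

equity = link_equity(backlinks=2, sharers=sharers)
print(equity["social_links"], equity["total"])  # 11 13
```

Under the current model this content would be credited with only its two backlinks; counting the eleven trusted shares as well is what would separate it further from weaker content.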

We tweet around 500 million times per day. Our social profiles are now part of our waking consciousness and have largely replaced blogs as our sounding boards (although blogs will always play that role to a smaller degree).

We still share and link to content; we’re just doing it on social media.

What Google needs to ask itself is: should ‘social links’ from trusted Twitter profiles be as important to its algorithm as backlinks from authoritative websites?

If Google can further its partnership with Twitter and use its own data from Google+, I see no reason why ‘social links’ cannot be included within the algorithm. This change would help Google to fairly rank the quality content that is shared around the web.

aaron says

If/when/as Google buys Twitter then they will claim to be able to create important signals from it, but so long as they don’t own it outright, they have little to no incentive to put much trust in it as a link source. Many years ago Matt Cutts even reached out to Ev Williams to inform him of the problem of Twitter profile links being dofollow. If Google didn’t want to trust the profile links, then they probably won’t want to put much weight in the links in the post either.

Some social exposure “signals” are aligned with the ad units of the competing social networks. There is no way Google wants to subsidize competing ad networks.

Ajay Prasad says

But Andrew, many people tend to retweet and share posts without even reading them, and believe me, I have seen such examples. Suppose a piece of content is thin or introduces nothing new but gets shared just because of the brand or hashtags associated with it. Then what? Still, Google can include social interactions and mentions as a signal, and there’s no reason why it won’t.

Nice takeaway anyway, Andrew.

Andrew Akesson says

Hi Ajay

Thanks for the comment. This is something that crossed my mind, but highly trusted social sources are like a brand, and they aren’t likely to tweet about something for the sake of it. This could be backed up by the number of trusted profiles that retweet the original tweet, which could weigh more heavily in the algorithm and largely remove the problem of people not reading what they are tweeting.