Note: We have many brand new quotes about Panda from Google attributed to a “Google Spokesperson.” These are all quotes given directly to the author and The SEM Post by Google. All other Google quotes are cited to their sources.

Google Panda is one of Google’s ranking filters. It seeks to downrank pages that are considered low quality, which lets sites with higher-quality, valuable content rise higher in the search results. But it is easily one of the most misunderstood algos.

Simply put, as a Google spokesperson told us, “Panda is an algorithm that’s applied to sites overall and has become one of our core ranking signals. It measures the quality of a site, which you can read more about in our guidelines. Panda allows Google to take quality into account and adjust ranking accordingly.”

[NOTE: There is more about Google making Panda part of the core ranking algo here]

Many SEOs have attempted to reverse engineer the Panda algo by examining sites that are the “winners” and “losers” every time we see an update or refresh, but this is a case where correlation does not equal causation.

Many Panda myths are also reported as fact, which leads webmasters to attempt to “fix” Panda in ways that end up hurting a site even more. The long-held belief that one must remove all low-quality content from a site to recover is a perfect example of this.

Throw into the mix the current longer Panda rollout, and it becomes harder to identify whether Panda is impacting a site at all, or whether the change comes from one of the hundreds of ranking signals that Google changes or updates every year.

Here is what we know about Google Panda, as confirmed by Google themselves, so that webmasters who feel Panda impacts them can recover without shooting themselves in the foot in the process.

Contents

- 1 Panda’s Impact on Content

- 1.1 Rethinking thin content and Google Panda

- 1.2 Removing the wrong content

- 1.3 Removing versus fixing

- 1.4 When Removal is Needed

- 1.5 Adding New Quality Content to Compensate

- 1.6 Panda Hit Sites Can Still Rank

- 1.7 Do all pages need to be high quality for the site to be high quality?

- 1.8 Expectations

- 1.9 Duplicate content

- 1.10 Similar content

- 1.11 404s – Not Found Pages

- 1.12 Aggregated Content

- 1.13 Look at a site with new eyes

- 2 Word Count & On Page Factors

- 3 Advertising & Affiliates

- 4 User-Generated Content

- 5 Technical Aspects of Panda?

Panda’s Impact on Content

Rethinking thin content and Google Panda

Recently Gary Illyes from Google said that he does not recommend removing content as a way to clean up from Panda. And while some SEOs do not agree with this (some quite vocally), it does make perfect sense. Removing content because you feel it could be negatively impacting the site from a Panda perspective could actually be making a site’s search problems even larger.

@jenstar We don't recommend removing content in general for Panda, rather add more highQ stuff @shendison

— Gary Illyes (@methode) October 7, 2015

Removing the wrong content

The biggest issue is that webmasters could be removing content that is fine in Google’s eyes, and is content that is ranking and driving traffic. Unless you are going through every piece of content and checking for Google referrals, you could be removing content that is performing well, but it might be long tail enough that it isn’t as noticeable.

Using Search Analytics in Google Search Console is a good way to determine what pages are getting traffic and identify potential candidates for content that needs improving due to zero Google referral traffic.

A Google spokesperson also took it a step further and suggested using it also to identify pages where the search query isn’t quite matching the delivered content. “If you believe your site is affected by the Panda algorithm, in Search Console’s Search Analytics feature you can identify the queries which lead to pages that provide overly vague information, or don’t seem to satisfy the user need for a query.”
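One way to act on this is to compare the site's full URL list against a Search Analytics export. The sketch below is a minimal illustration, not a Google tool. It assumes a CSV export with `page` and `clicks` columns (hypothetical column names; adjust them to match your actual export) and a list of site URLs taken from your own crawl or sitemap.

```python
import csv
import io


def pages_without_google_clicks(all_urls, search_analytics_csv):
    """Return site URLs with zero recorded clicks in the export.

    Assumes the CSV has 'page' and 'clicks' columns (an assumption,
    not a documented export format).
    """
    clicks_by_page = {}
    reader = csv.DictReader(io.StringIO(search_analytics_csv))
    for row in reader:
        page = row["page"]
        clicks_by_page[page] = clicks_by_page.get(page, 0) + int(row["clicks"])
    # URLs missing from the export entirely also count as zero clicks.
    return sorted(url for url in all_urls if clicks_by_page.get(url, 0) == 0)


sample_export = """page,clicks
https://example.com/a,12
https://example.com/b,0
"""
site_urls = [
    "https://example.com/a",
    "https://example.com/b",
    "https://example.com/c",
]
candidates = pages_without_google_clicks(site_urls, sample_export)
```

The pages returned are candidates for improvement, not for deletion. Long-tail pages with only a few clicks will not appear in this list, which is the point: they are still earning traffic.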

Added up, removing the wrong content can have a significant negative impact, both because you are removing content that Google sees as quality and because you are also losing the traffic Google was sending to those pages. And depending on the number of pages removed, it can seem that the site is continuing to lose traffic due to Panda or other algo changes when in reality it is the removal of those pages that caused it.

There is also the possibility that another search engine might think the content you are removing is awesome, and you could lose that traffic as well. Often when people look at search engine referrals, they only look at Google because of the market share, but especially if some of the site is impacted by Panda, that search share can look quite a bit different for some sites.

Removing versus fixing

When Illyes kicked off the firestorm with his comment that thin content should be fixed not deleted, it did get many people rethinking the “remove all the things” strategy that many Panda experts were recommending. But going back years when either Illyes or John Mueller addressed the topic of thin content and how to fix it, it was always add new quality content and fix the old. Never was removing the content one of the suggestions. Instead, if someone was determined to remove content, it was suggested to merely prevent that content from being indexed via robots.txt or NOINDEX.

In fact, this seems to be Google’s only suggested course of action for thin content for those not inclined to improve it – stop it from being indexed, but no mention of removing it completely.

John Mueller in a Google Webmaster Office Hours in March 2014 did make reference to removal, but this was in the context of republishing aggregated data from an RSS feed or user generated comments.

Overall the quality of the site should be significantly improved so we can trust the content. Sometimes what we see with a site like that will have a lot of thin content, maybe there’s content you are aggregating from other sources, maybe there’s user generated content where people are submitting articles that are kind of low quality, and those are all the things you might want to look at and say what can I do; on the one hand, if I want to keep these articles, maybe prevent these from appearing in search.

Maybe use a noindex tag for these things. Maybe you could remove them completely and say this is so low quality I don’t want to have my name associated with that and remove them completely from your website. But that’s something where you need to take a really strong and honest look at your website and think about what you can do to improve things.

Outside of this, the mantra from Google has been to improve or noindex.

Noindexing the content still means users can find it on their own on the site if they need it, and you can also track these noindexed pages to see whether they are still getting traffic from the natural flow on the site. If they are, that could be a sign the content was noindexed as thin in error.

This is something that Maile Ohye was recommending as far back as 2011, when Panda originally launched. She suggested that webmasters temporarily noindex lower quality pages, then remove the noindex as each page has unique content added.

A Google spokesperson also said this, when referring to lower quality pages. “Instead of deleting those pages, your goal should be to create pages that don’t fall in that category: pages that provide unique value for your users who would trust your site in the future when they see it in the results.”

When Removal is Needed

That said, there are some instances where removal might be the best course of action. For example, if a forum has become overrun with spam, there is no real way to improve upon a spam post to “buy Viagra” or “buy Ugg boots.”

The same can be said if a site is merely republishing RSS feeds, unless the webmaster wants to go and change all of those republished articles into new fresh content about each of those articles. As Google has said before, useless is useless.

At Pubcon, Gary Illyes did give best practices for those who do want to remove content rather than improve it. He suggests noindexing the content, then adding those pages to the site’s sitemap so Google processes the noindex more quickly.
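As a rough sketch of that workflow, the pages get a robots noindex meta tag and are then listed in a sitemap so they are recrawled. The helper name `build_sitemap` and the example URLs are hypothetical; only the meta tag and the sitemaps.org namespace are standard.

```python
import xml.etree.ElementTree as ET

# Standard robots meta tag to place in the <head> of each page being noindexed.
NOINDEX_META = '<meta name="robots" content="noindex">'

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls):
    """Build a minimal sitemap XML string listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


# Hypothetical pages that have just had the noindex tag added.
noindexed_pages = [
    "https://example.com/old-thread-1",
    "https://example.com/old-thread-2",
]
sitemap_xml = build_sitemap(noindexed_pages)
```

The pages must stay crawlable for this to work: a page blocked in robots.txt cannot be fetched, so the noindex tag on it would never be seen.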

Adding New Quality Content to Compensate

Adding new content to a site is a great solution to Panda as well. A site will always benefit from new quality content, even if Panda impacts some of their content negatively.

Panda Hit Sites Can Still Rank

There is a huge misconception that if Panda hits a site, none of it will rank. But this isn’t accurate.

What most people are seeing are sites that have content that is overwhelmingly poor quality, so it can seem that an entire site is affected. But if a site does have quality content on a page, those pages can continue to rank.

A Google spokesperson confirmed this as well.

The Panda algorithm may continue to show such a site for more specific and highly-relevant queries, but its visibility will be reduced for queries where the site owner’s benefit is disproportionate to the user’s benefit.

This is another reason Google continues to say removing poor quality content is not the best option in many cases. Removing spam is fine, but if the quality is just poor, adding additional content – both on those pages and as new quality pages – can help.

Do all pages need to be high quality for the site to be high quality?

While a site can still rank on good quality pages despite some pages being impacted by Panda, a site can likewise be considered great quality even if it has some low quality pages. What Google wants to see is a site with the vast majority of pages classified as high quality.

A Google spokesperson told us, “In general high quality sites provide great content for users on the vast majority of their pages.”

So a site with a handful of low quality pages can still be considered a great site overall. It is always worth improving low quality pages, but webmasters need not worry much if a few pages are lower quality than the rest. That said, webmasters would need to ensure that it really is only a handful of pages, and not a majority of them.

Expectations

Are you meeting your searcher’s expectations? Google has said a lot about making sure you are ranking for keywords you have satisfying content for. You might have an amazing piece of content, but if you are ranking for particular queries that don’t give people what they are looking for, Google could perceive it as poor quality content.

A Google spokesperson put it more simply, “At the end of the day, content owners shouldn’t ask how many visitors they had on a specific day, but rather how many visitors they helped.” This is similar to what Illyes said at Pubcon during his keynote in October 2015.

You should ask "how many visitors you helped" not "how many visitors you had today." @methode #pubcon

— Jennifer Slegg (@jenstar) October 8, 2015

Duplicate content

Many people had assumed that the duplicate content filter (yes, it is just a filter, not a penalty) was a core part of Panda, but it is not. John Mueller confirmed last month that Panda and duplicate content are “two separate and independent things.”

Of course, regardless of whether it is a Panda signal or not, it is best practice to clean up those duplicates, especially since dupes on a massive scale can result in a manual action being taken on the site.

Even from an SEO perspective, when Mueller was answering questions about a Panda-hit site, and one of the issues raised by the site owner was the duplicates on the site, he said that is a low priority compared to ensuring the content on the site is the best of its kind.

If you were to ask me where to prioritize my time for this website, I would put cleaning up “technical duplication” like that somewhere in the sidebar or even quite low on the list. Sure, it’s worth doing, but it’s not going to be the critical factor. Our algorithms are pretty good at dealing with duplication within a website. If you are trying to sell a car, then washing it is a good idea — but if the car has serious mechanical issues, then washing it isn’t going to get it sold.

In other words, internal duplication isn’t a critical factor, especially in the world of unsavvy WordPress site owners accidentally duplicating many pages. It is just a minor piece in the algo puzzle.

Similar content

For “how to” sites in particular, you might want to take a closer look at your content if you have many pieces that are very similar. When there is an abundance of articles on an almost identical topic, the quality of that content will probably vary greatly.

For example, eHow has over 100 articles (and probably more, I stopped counting at 100) on how to unclog a toilet. Unless a website is trying to be the most amazing uncloggingtoilets.com website of all time, then it probably makes sense to see how some of those pages could be combined – especially since it is very unlikely Google is sending traffic to all 100 pages.

Check your Google referrals to those pages and see what is getting traffic from Google, because that will be a signal that Google thinks those pages are good enough to rank and send traffic to. But take a close look at the rest of those pages that have zero traffic from Google.

If you have a lot of similar content and are also having Panda issues, it makes sense to combine some of those articles that aren’t ranking or getting traffic from Google to raise the quality, then redirect the old URLs.
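The redirect step can be sketched as a simple lookup from each retired URL to the combined article. This is an illustration only. The paths, the `MERGED_INTO` table and the `resolve` helper are hypothetical, and in practice the mapping would live in your server or CMS redirect configuration.

```python
# Hypothetical mapping: retired article path -> combined article path.
MERGED_INTO = {
    "/unclog-toilet-with-plunger": "/how-to-unclog-a-toilet",
    "/unclog-toilet-without-plunger": "/how-to-unclog-a-toilet",
    "/unclog-toilet-with-snake": "/how-to-unclog-a-toilet",
}


def resolve(path):
    """Return (status, location) for a requested path.

    Retired paths get a permanent (301) redirect to the combined
    article; every other path is served as-is.
    """
    target = MERGED_INTO.get(path)
    if target is None:
        return 200, path
    return 301, target


status, location = resolve("/unclog-toilet-with-snake")
```

Pointing every retired URL straight at the final article, rather than at another retired URL, avoids redirect chains.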

404s – Not Found Pages

For those that have many 404s, either from a crawl issue or from removing pages, 404s don’t have an effect on Panda at all.

@Photogkris nope @JohnMu

— Gary Illyes (@methode) August 11, 2015

This makes total sense. After all, it would be very easy for a competitor to point a ton of links at non-existent URLs if Google did consider 404s any kind of Panda – or core algo – signal.

Aggregated Content

Google seems to see very little value in aggregators – with a few notable exceptions.

John Mueller commented on the lack of value in aggregators and says searchers are better off going to the sites listed directly. There is little value in Google’s eyes in making a searcher click twice to find what they want.

One thing you really, really also need to take into account is that our algorithms focus on unique, compelling, and high-quality content. If a site is just aggregating links to other sites, then there’s not really much value in showing that in search — users would be better served to just go to the destination directly. So instead of just focusing on technical issues like crawling and indexing, I’d strongly recommend taking a step back and rethinking your site’s model first.

There are some notable exceptions – Techmeme.com would be an example of an aggregator that does well in Google.

Look at a site with new eyes

One of the things John Mueller has recommended multiple times over the years when it comes to a webmaster needing to learn whether the content is quality or not is to have someone unconnected with the website visit the site and then give feedback.

With regards to the other issues you mentioned, I’d also recommend going through Amit’s 23 questions together with someone who’s not associated with your website, for example after having them complete various tasks on your site and on other similar sites. As you mention Panda, which is an algorithm that focuses on the quality of the content, I’d also like to suggest that you take a good look at the quality of your own content.

Many SEOs feel they have awesome content, whether they wrote it themselves or hired someone, but it can sometimes be hard for them to see past their preconceived notions of how awesome their site is.

Word Count & On Page Factors

Rethinking word count

While it is a good rule of thumb to ensure that any new content you create is over a certain word count, a low word count does not equal content that will get hit by Panda. In fact, there is a lot of content that Google not only thinks is quality, but content that Google is also rewarding with featured snippets.

John Mueller commented that there is no minimum word count when it comes to gauging content quality.

There’s no minimum length, and there’s no minimum number of articles a day that you have to post, nor even a minimum number of pages on a website. In most cases, quality is better than quantity. Our algorithms explicitly try to find and recommend websites that provide content that’s of high quality, unique, and compelling to users. Don’t fill your site with low-quality content, instead work on making sure that your site is the absolute best of its kind.

And if it did come down to word count, it would be incredibly easy for spammers to game. So it makes complete sense that word count isn’t a “content under X words is Pandalized” situation. Yet the poor recommendation from experts to remove content based on word count lives on.

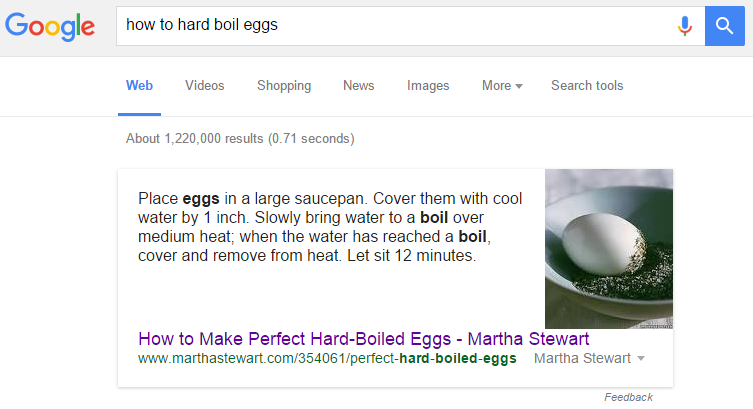

Case in point: “How to hard boil eggs”

Here is a featured snippet and when you go to the actual webpage and remove the extraneous content such as navigation and related articles, you are left with an article of only 139 words.

Based on the theory that anything under 250 words is “thin”, then this site, if it had removed this content for being thin, not only would have lost rankings but also a featured snippet.

There are many other examples of pages with low word count, but because they answer the question fully, they not only rank well but grab a featured snippet too.

Content Can Take Many Forms

Some people feel that image sites are unfairly penalized by Panda because their content isn’t written. But John Mueller says that content can take many forms and that a site primarily in images (or videos, etc) doesn’t automatically pose a problem.

Generally speaking, when our algorithms look out for unique, compelling, and high-quality content, that can have many forms, including images, videos, or other kinds of embedded rich-media. That said, as Scott mentioned, it helps us a lot to have context of any content like that, and we generally pick up that context in the form of text on pages that embed that kind of content. We have more recommendations at http://support.google.com/webmasters/bin/answer.py?hl=en&answer=114016 and a recent blog post at http://googlewebmastercentral.blogspot.de/2012/04/1000-words-about-images.html

At any rate, having a site that is primarily focused on images is not a problem by itself.

So having content that is not just the written word doesn’t mean it is at an automatic greater risk.

Advertising & Affiliates

Advertising and affiliate links do have a role to play in Panda, but primarily in how it detracts from the star of the page – the content.

Many of the sites proclaimed to be “Panda losers” after a Panda refresh or update do tend to have an overabundance of ads or affiliate links in all, or at least a significant portion, of their content. And all too often this content isn’t created with the user’s experience in mind, but rather how much revenue they can squeeze out of that user.

Gary Illyes recently said at Pubcon that webmasters shouldn’t be asking how many visitors they had on a site, but rather how many visitors they helped. And this is often contradictory to what we see happening on many sites in reality. Far too many websites are trying to get more benefit from the user than the user gets back by visiting the website in the first place.

A Google spokesperson agrees with this assessment, and how it not only applies to sites heavy on ads or affiliate links, but to content that is poorly created or reused from other sites.

An extreme example is when a site’s primary function is to funnel users to other sites via ads or affiliate links, the content is widely available on the internet or it’s hastily produced, and is explicitly constructed to attract visitors from search engines. The Panda algorithm may continue to show such a site for more specific and highly-relevant queries, but its visibility will be reduced for queries where the site owner’s benefit is disproportionate to the user’s benefit.

Affiliate sites, in general, don’t have a signal that means Panda scrutinizes them harder than a regular non-affiliate site. But many affiliate sites just aren’t creating the type of content that Panda loves. And the same can be said for sites that are hitting their visitors over the head with mass amounts of advertising.

Advertising

Similar to how Google views advertising in the quality guidelines, as long as it isn’t obtrusive it shouldn’t be an issue where Panda is concerned. After all, we also have the page layout algo, an algorithm that looks at where ads are positioned on a page and downranks pages with excessive ads “above the fold.”

The key seems to be how noticeable and especially how obtrusive those ads are to the user experience.

A year ago, in a hangout with John Mueller, he was asked by Barry Schwartz about advertising on websites, specifically on SERoundtable, and about the possible impact of Panda on his site. Mueller had this to say:

At least speaking from myself personally, it’s not something that I noticed. If these ads are on these pages, and they’re essentially not in the way, they don’t kind of break the user experience of these pages, then I see no real problem with that.

It would be a little bit more tricky if these were for example adult content ads, where our adult content algorithms would look at this page and say well, primary topic isn’t adult oriented, but there are a lot of adult content ads on here, therefore we don’t really know how to fit this into our safe search filter and say is this adult content. I haven’t seen anything like that on Barry’s site, so at least from my point of view that is not something I’d worry about there.

People often associate having heavy ads above the fold as being a Panda factor, but this is part of a core algo commonly called the Above the Fold algo, targeting sites that place so many ads above the fold that a user is required to scroll to see the content.

Affiliate Links

John Mueller has talked about affiliate marketing as it pertains to Panda and quality. Being an affiliate site or having affiliate links is not going to trigger Panda on a site, nor is there any downranking applied to affiliate sites. Mueller has said this several times in his Hangouts.

Of course affiliate sites can be really useful, they can have a lot of great information on them and we like showing them in search.

However, many affiliate sites tend to have the same characteristics of what we see with Panda sites.

Many seem to feel it is problematic once you start having multiple affiliate links within the content, and that content is lower quality, particularly if the site has many pages with a similar setup.

But at the same time we see a lot of affiliates who are basically just lazy people who copy and paste the feeds that they get and publish them on their websites. And this kind of lower quality content, thin content, is something that’s really hard for us to show in search. Because we see people, sometimes like hundreds of people taking the same affiliate feed, republish it on their websites and of course, they are not all going to all rank number one for those terms.

In other words, you need to give Google a reason to want to show your content over someone else’s for your search terms.

Most of the best affiliate sites are ones with great content that sets it apart from other affiliate and non-affiliate sites. They aren’t simply bringing in traffic for the sale – or at least it doesn’t appear that way to the searcher.

There are also benefits from traffic even if it doesn’t convert into a click on an affiliate link. Maybe they share it on social media, maybe they recommend it to someone, or they return at a later time, remembering the good user experience from the previous visit.

A Google spokesperson also said, “Users not only remember but also voluntarily spread the word about the quality of the site, because the content is produced with care, it’s original, and shows that the author is truly an expert in the topic of the site.” And this is where many affiliate sites run into problems.

Just like a non-affiliate site, pages on an affiliate site need to help the user, not simply help the webmaster. Some sites go overboard with the affiliate links, especially when pumping out shallow content such as “top ten gifts for …” with a couple sentences for each along with a huge Amazon affiliate block. Many of these are a poor user experience, and many find they don’t bring in much revenue since they do tend to turn off users. And they very rarely add anything of value.

Beware adding ads/affiliate links to compensate for lost traffic

Some webmasters who have been hurt by Panda or other algorithmic changes tend to try to overcompensate with an overabundance of ads and affiliate links to make up the lost revenue from the traffic, or they go ahead and make even more seemingly low-quality articles for the sole purpose of being able to place affiliate links. But this can hurt the overall user experience and perceived quality of the site.

If you suspect you are negatively impacted over affiliate links, the smart solution is to drop them and see if that improves Google search referrals. And if you decide to return any affiliate links to the content, definitely check your affiliate reports. Drop all links that have never seen a click or not had a click in recent months and start by adding back your highest revenue links. But don’t forget to check Google referrals. Pages with affiliate links that are still getting traffic from Google have probably passed the Panda test.
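The triage described above can be sketched in a few lines. This is a hedged illustration, not a prescribed process. The `AffiliateLink` record and its fields are hypothetical stand-ins for whatever your affiliate network’s report actually provides.

```python
from dataclasses import dataclass


@dataclass
class AffiliateLink:
    url: str
    recent_clicks: int  # clicks in recent months, per your affiliate report
    revenue: float      # revenue attributed to this link


def restore_order(links):
    """Drop links with no recent clicks, then order the rest by revenue."""
    clicked = [link for link in links if link.recent_clicks > 0]
    return sorted(clicked, key=lambda link: link.revenue, reverse=True)


# Hypothetical report data for illustration.
report = [
    AffiliateLink("https://example.com/go/blender", 40, 120.0),
    AffiliateLink("https://example.com/go/toaster", 0, 0.0),
    AffiliateLink("https://example.com/go/kettle", 15, 310.5),
]
to_restore = restore_order(report)
```

Links would then be added back from the top of the list down, checking Google referrals to those pages after each batch.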

But remember, affiliate links are only one piece of the puzzle. Removing affiliate links from crap content won’t magically make that content “non-crappy.”

User-Generated Content

User generated content and how it affects Panda has been a hot topic recently, and it has gotten to the point where many SEOs are recommending the removal of all user-generated content, claiming Google sees user generated content as poor quality. But this is actually far from the truth.

There are many sites with primarily user-generated content that do very well in the Google search results, even to the point of nabbing featured snippets for search queries.

Google agrees that there are great quality sites that are user generated. A Google spokesperson told us, “Sometimes such sites may also have an active community that creates high quality content. At the end of the day, content owners shouldn’t ask how many visitors they had on a specific day, but rather how many visitors they helped.”

But there is a danger that sites are hurting themselves with this “remove all user generated content” push, even when that content is helping their visitors.

UGC Quality

There is nothing wrong with user generated content as long as it is quality user generated content. Even John Mueller has suggested that UGC is a perfectly fine way to build content.

Some of this could even be built up by loyal fans of your website, don’t discount the willingness of users wanting to help improve a website that they already enjoy :).

Stackoverflow is an example of a site that is almost entirely user generated, yet which is clearly high quality.

Special Case for Forums?

But there is a definite concern about UGC, particularly when it comes to forums. And while Gary Illyes recently said that you are best NOT to remove content as a Panda recovery method, John Mueller offers a solution for those who might have some great forum content but with some less than stellar content thrown in – but content that might be useful to visitors on the site. After all, if you start removing threads on a forum that you consider low quality, there becomes the whole issue of censorship, which can quickly cause issues with those who are regulars on a forum and are producing quality content, referrals and the like.

John Mueller has a better option for those who are concerned about the quality of some forum posts and how it might impact Panda.

Another thing you didn’t mention, but which is common with forums is low-quality user-generated content. If you have ways of recognizing this kind of content, and blocking it from indexing, it can make it much easier for algorithms to review the overall quality of your website. The same methods can be used to block forum spam from being indexed for your forum. Depending on the forum, there might be different ways of recognizing that automatically, but it’s generally worth finding automated ways to help you keep things clean & high-quality, especially when a site consists of mostly user-generated content.

Maile Ohye suggested at SMX in 2011 that pages posing a question – as many opening posts in a forum thread do, though the same could apply to “how to” sites – remain noindexed until there is an answer or solution. Once that content is on the page, remove the noindex.
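In template terms, that rule amounts to emitting a robots meta tag only while a thread has no replies. The function below is a minimal sketch. The name `thread_robots_meta` and the use of a raw reply count as the test for “has an answer” are assumptions, and a real forum might instead check for an accepted or moderator-approved answer.

```python
def thread_robots_meta(reply_count):
    """Return the robots meta tag for a thread page, or '' if none is needed.

    Assumption: any reply counts as an answer. Swap in a stricter
    check (for example an accepted-answer flag) if your forum has one.
    """
    if reply_count == 0:
        return '<meta name="robots" content="noindex">'
    return ""


unanswered_tag = thread_robots_meta(0)
answered_tag = thread_robots_meta(3)
```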

Community Guidelines

Could user guidelines or “house rules” for a forum – or any other platform that allows users to submit content – solve some of the “thin content” issues that many associate with forums? Mueller says the quality forums he belongs to have an active community that takes action when there are problems with new members and the posts they are making.

One of the difficulties of running a great website that focuses on UGC is keeping the overall quality upright. Without some level of policing and evaluating the content, most sites are overrun by spam and low-quality content. This is less of a technical issue than a general quality one, and in my opinion, not something that’s limited to Google’s algorithms. If you want to create a fantastic experience for everyone who visits, if you focus on content created by users, then you generally need to provide some guidance towards what you consider to be important (and sometimes, strict control when it comes to those who abuse your house rules).

When I look at the great forums & online communities that I frequent, one thing they have in common is that they (be it the owners or the regulars) have high expectations, and are willing to take action & be vocal when new users don’t meet those expectations.

Don’t forget you can also do things like set forum permissions to require a specific character count per post. This way, instead of someone posting a “yep!” response, they might go into a bit more detail of “yes, this works because ____”. It adds content but also adds value.

The same applies to user profiles… limit indexing on those pages to only those who have X number of posts. This way people can still find specific profiles for popular or prolific members while you don’t end up with hundreds or thousands of mostly empty profiles indexed… and it also solves the profile spam issue.
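A profile template could apply that threshold along these lines. This is a sketch under stated assumptions: the helper name and the default of 10 posts are illustrative, and the right value of X depends on the community.

```python
def profile_robots_content(post_count, min_posts=10):
    """Return the robots meta 'content' value for a user profile page.

    min_posts is an illustrative default, not a recommended number.
    Links on the profile stay followable either way.
    """
    if post_count >= min_posts:
        return "index, follow"
    return "noindex, follow"


new_member = profile_robots_content(0)
regular = profile_robots_content(250)
```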

Forum Titles

On a related note, Mueller also suggests that forum owners or trusted moderators/admins be allowed some editing privileges to rewrite the title. There are many times a forum thread is started with the title/subject being something along the lines of “HELP!!!” or “What should I do?”. And rewriting it isn’t just for search engines, but for users too. Google wants to send you traffic, but they also need to serve results that are useful to their searchers, and no matter which way you slice and dice it, a title of “HELP!!!” isn’t going to be useful to anyone.

Depending on your forum, it might make sense to allow the admins to rewrite the title or description. For example, if your forum provides product support, then instead of just taking a user’s question 1:1, maybe it could be transformed into something more generic, or easier to understand, for the title or description.

Another excellent recommendation to improve titles on a forum, particularly if it is a help type forum, is to include the word “[SOLVED]” or something similar. Because Google sometimes rewrites titles, there is no guarantee Google will show it, but it can be an extremely helpful signal for searchers looking to solve a problem.

Title: As with the description, our algorithms sometimes rewrite the title tag on the page, sometimes even depending on the query the user made ( https://support.google.com/webmasters/answer/35624?hl=en ). While it’s possible to add something like “solved” to the title, it’s also not guaranteed that we’ll show it in all search results.

It could be added to the start or the end of the title – some won’t want to risk moving title keywords later in the title tag – but ensure that it would be viewable in the search results if it is a long title. This is something that works well – and ranks well – on many sites, with computer help sites being the most visible example to searchers.

If someone has a computer problem, a title tag that shows the problem is resolved on the landing page would be a much more appealing result than one where the searcher doesn’t know from the title or snippet whether the solution is inside or not.
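To make the “[SOLVED]” idea concrete, here is a minimal sketch of building a thread's title tag. The function name and the 60-character limit are assumptions for the example (search results truncate long titles, but the exact cut-off varies), and Google may still rewrite whatever title you provide.

```python
def build_thread_title(subject: str, solved: bool, max_len: int = 60) -> str:
    """Build a title tag for a forum thread, marking solved threads up front.

    max_len is a hypothetical display limit chosen for this example.
    """
    prefix = "[SOLVED] " if solved else ""
    room = max_len - len(prefix)
    subject = " ".join(subject.split())  # normalise whitespace
    if len(subject) > room:
        # Trim the subject, not the marker, so "[SOLVED]" stays visible.
        subject = subject[: room - 3].rstrip() + "..."
    return prefix + subject
```

Putting the marker at the start keeps it visible even when the title is cut off; a site that prefers to keep keywords first could append it instead and trim the subject the same way.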

Remove forums completely?

There has also been a recent push by some experts to mass remove forums, implying that all forum content is poor quality. But in reality, nothing could be further from the truth.

The same can be said for any website – there are some sites that are amazingly awesome and some that are complete crap. And you see the same thing on forums. Sites can lose a great deal of quality content if they decide to do a blanket noindex or removal of all forums as a Panda recovery method.

In a recent discussion on Twitter between Gary Illyes, Marie Haynes and me, I brought up Stack Overflow as a forum site that does very well, even though there are pages that are simply questions without answers.

@Marie_Haynes @methode @shendison What about a site like Stackoverflow? They have a ton of no-response posts & they do amazingly well in G.

— Jennifer Slegg (@jenstar) October 8, 2015

While Marie Haynes argued that it wasn’t a good example because they do have so much great content, Illyes said it comes back around to providing great content for users.

@Marie_Haynes so… you're back to my point? Overwhelm your users with great content that's created for THEM? @jenstar @shendison

— Gary Illyes (@methode) October 8, 2015

Comments

Comments can be another problematic area from a Panda perspective. Great comments show that a webpage has a lot of engaged visitors, but lower quality comments can drag the overall quality of a page down.

Comments can even affect inclusion in Google News: news stories can be kicked out when the news algorithms pick up on a large number of comments on an article, because so much non-article copy on the page dilutes the value of the actual article and makes it look less relevant.

But comments can certainly be impacted by Panda depending on the type of comments and how moderated comments on the site actually are.

In a hangout with John Mueller, where Barry Schwartz was asking about SERoundtable’s Panda issues, comments were a possible issue raised by Mueller, although he noted that he had not looked at the site’s issues personally.

… it also includes things like the comments, includes the things like the unique and original content that you’re putting out on your site that is being added through user generated content, all of that as well. So while I don’t really know exactly what our algorithms are looking at specifically with regards to your website, it’s something where sometimes you go through the articles and say well there is some useful information in this article that you’re sharing here, but there’s just lots of other stuff happening on the bottom of these blog posts.

When our algorithms look at these pages, in an aggregated way across the whole page, then that’s something where they might say well, this is a lot of content that is unique to this page, but it’s not really high quality content that we want to promote in a very visible way. That’s something where I could imagine that maybe there’s something you could do, otherwise it’s really tricky I guess to look at specific changes you can do when it comes to our quality algorithms.

Schwartz questioned whether he needed to look at the comments specifically, so Mueller clarified further.

Well, I think you need to look at the pages in an overall way, you should look at the pages and say, actually we see this a lot in the forums for example, people will say “my text is unique, you can copy and paste it and it’s unique to my website.” But that doesn’t make this website page a high quality page. So things like the overall design, how it comes across, how it looks like an authority, this information that is in general to webpage, to website, that’s things that all come together.

But also things like comments where webmasters might say “this is user generated content, I’m not responsible for what people are posting on my website,” when we look at a page, overall, or when a user looks at a page, we see these comments as part of the content design as well. So it is something that kind of all combines to something that users see, that our algorithms see overall as part of the content that you’re publishing.

Disqus has settings that allow you not to output comments in HTML and there is a setting in WordPress to allow pagination for comments, so you don’t end up with dozens or hundreds of comments on a page that can dilute the quality of the actual article.
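For sites that render their own comments, the pagination idea can be sketched as below. This is a generic illustration, not how WordPress or Disqus implement it; the function name and the default of 20 comments per page are assumptions.

```python
import math
from typing import List, Sequence, Tuple


def paginate_comments(comments: Sequence[str], page: int = 1,
                      per_page: int = 20) -> Tuple[List[str], int]:
    """Return the comments for one page plus the total number of pages."""
    total_pages = max(1, math.ceil(len(comments) / per_page))
    # Clamp out-of-range page numbers instead of returning an empty page.
    page = min(max(page, 1), total_pages)
    start = (page - 1) * per_page
    return list(comments[start:start + per_page]), total_pages
```

With this approach the article page itself only ever carries one page of comments, so the article copy is not buried under hundreds of replies.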

Comments being a positive signal

That being said, great comments can be great from a Panda perspective. If the comments are good, they add to the quality content on the page, they show user engagement and can reflect how popular a website really is.

In a Hangout in 2014, John Mueller said great comments can be a positive thing for a website.

That’s something where we essentially try to treat these comments as part of your content. So if these comments bring useful information in addition to the content that you’ve provided also on these pages, then that could be a really good addition to your website. It could really increase the value of your website overall. If the comments show that there’s a really engaged community behind there that encourages new users when they go to these pages to also comment, to go back directly to these pages, to recommend these pages to their friends, that could also be a really good thing.

Spam or Flame War Type Comments

John also says that bad comments can definitely impact a site negatively too.

If you have comments on your site, and you just let them run wild, you don’t moderate them, they’re filled with spammers or with people who are kind of just abusing each other for no good reason, then that’s something that might kind of pull down the overall quality of your website where users when they go to those pages might say, well, there’s some good content on top here, but this whole bottom part of the page, this is really trash. I don’t want to be involved with the website that actively encourages this kind of behavior or that actively promotes this kind of content. And that’s something where we might see that on a site level, as well.

When our quality algorithms go to your website, and they see that there’s some good content here on this page, but there’s some really bad or kind of low quality content on the bottom part of the page, then we kind of have to make a judgment call on these pages themselves and say, well, some good, some bad. Is this overwhelmingly bad? Is this overwhelmingly good? Where do we draw the line?

If, on the other hand, these comments are kind of low quality, un-moderated, spam, or abusive comments just going back and forth, then that might be something you’d want to block. Maybe it’s even worth adding moderation to those comment widgets and kind of making sure that those kind of comments don’t even get associated with your website in the first place.

This is why it makes so much sense to have a strong comment policy and moderate comments. Having a negative reputation in your market area for the bad comments is not a good thing.
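A comment policy is easier to enforce when obviously risky comments are held for review before they appear. The sketch below is a deliberately simple heuristic, assuming a link limit and a placeholder list of blocked terms that you would replace with your own house rules; it is not a spam filter and says nothing about how Google evaluates comments.

```python
import re
from typing import Iterable

LINK_RE = re.compile(r"https?://", re.IGNORECASE)


def needs_moderation(comment: str, max_links: int = 1,
                     blocked_terms: Iterable[str] = ("buy cheap", "idiot")) -> bool:
    """Return True if a comment should be held for manual review.

    max_links and blocked_terms are hypothetical defaults for illustration.
    """
    # Several links in one comment is a common sign of comment spam.
    if len(LINK_RE.findall(comment)) > max_links:
        return True
    lowered = comment.lower()
    return any(term in lowered for term in blocked_terms)
```

Anything flagged would go to a moderation queue rather than being published or deleted automatically, so a human still makes the final call.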

Comments Affecting Entire Sites

Mueller also confirms that comments on a site, especially low quality ones, can definitely impact the quality of an overall site, meaning that it could technically impact pages on a site that don’t even have comments on them.

And we do that across the whole website to kind of figure out where we see the quality of this website. And that’s something that could definitely be affecting your website overall in the search results. So if you really work to make sure that these comments are really high quality content, that they bring value, engagement into your pages, then that’s fantastic. That’s something that I think you should definitely make it so that search engines can pick that up on.

Removing all comments

Another recent trend we have been seeing is experts who are recommending removing comments completely as a Panda fix. While some are making the change simply due to moderation issues, especially the time that it takes to effectively moderate comments on a popular site, some are doing it for Panda reasons.

The reasoning behind the removal is that sites no longer have to worry about comments dragging down the content’s quality. But the downside of removing or blocking all comments is that you lose a few important signals. First, user engagement, since great quality comments can show that the content of the article is important and relevant. And second, it can lessen the value of the article, especially for the many sites where people would visit repeatedly to see updated comments on an interesting article.

It also can be detrimental to a site since a searcher who ends up on the page can’t use comments as a gauge to see if the author is correct or not. Often it is the comments that will reveal that there is an issue with the content, and removing the ability to leave comments or removing comments from older content can leave users with a negative opinion, especially if they found the comments on a site previously useful.

And depending on the type of site, we have seen some comments on articles cause an article to rank for keywords. This isn’t a poor user experience because the answer is on the page, and is often in response to a question asked in the article, so it is natural for users to look to the comments.

Technical Aspects of Panda?

Panda is about content. It isn’t about technical things like how many links are pointing to the page or how fast it loads. Not that those things aren’t important, but if you go back through comments Google employees have made specifically about Panda, technical aspects have never come up. In fact, they suggest looking beyond technical issues where Panda is concerned.

In a hangout last year, John Mueller was talking to Barry Schwartz about the SERoundtable Panda problems, and Mueller said, “In general, I also wouldn’t look at it as too much of a technical issue if you are looking at Panda issues. But instead I’d look at the website overall, the webpages overall, that includes things like the design, where I think your website is pretty good compared to a lot of other sites.”

That said, technical issues are an SEO component, and while they do not directly impact Panda, they certainly can cause ranking issues just as Panda can, and many of them are covered by Google’s other core ranking signals.

In fact, multiple times in the Google Webmaster Help forums, Mueller has redirected webmasters from looking at technical issues and to focus instead on the content and overall value of the site.

Page Speed

Because there is a lot of talk about page speed and the potential mobile friendly signal, something Gary Illyes has mentioned numerous times since the introduction of the original mobile friendly algo, some people don’t realize that page speed already is a ranking signal.

Google introduced page speed as a signal back in 2010, and it predates Panda by almost a year.

How much of a signal is it? Not a huge one in comparison to other signals such as links, content, and relevance.

User engagement

User engagement is another popular myth about Panda, but there’s been no evidence Google has ever used engagement in any form as a Panda signal. Instead, many assume it must be a signal because it could show whether users are liking and engaging with the content as a quality signal.

The only possible user engagement “signal” Panda could be using is based on comments, which is again another reason this recent “remove comments” trend could hurt sites.

TLD advantages

While some TLDs tend to have more spam than others, Panda doesn’t look at content on sites differently based on what TLD they are on. In fact, Google has publicly said multiple times that Google does not give any ranking advantage or disadvantage to sites simply based upon the TLD it is located on.

Just over a year ago, John Mueller reminded SEOs of a post Matt Cutts made previously that said Google does not favor any TLD, specifically mentioning new TLDs, which some domain registrars promote as having an advantage in Google search.

It feels like it’s time to reshare this again. There still is no inherent ranking advantage to using the new TLDs.

They can perform well in search, just like any other TLD can perform well in search. They give you an opportunity to pick a name that better matches your web-presence. If you see posts claiming that early data suggests they’re doing well, keep in mind that’s this is not due to any artificial advantage in search: you can make a fantastic website that performs well in search on any TLD.

More recently, they debunked it on the Google Webmaster blog and in a tweet by Gary Illyes last month.

What It All Means

For those webmasters who feel they are being impacted by Panda, it is very important you don’t make the wrong SEO decisions that can actually make your Google search situation even worse than it is. With the slow roll out of Panda, it is harder to definitively say whether an issue is Panda or not, so it makes a lot of sense to ensure the steps you take are made for the right reasons.

If you fear the issue is comments, look at moderating the low quality ones rather than removing them completely, which can hurt your site visitors who like to see comments others have about an article. Or look into other on-page solutions to diminish their impact, such as pagination of comments.

User generated content can be valuable for Panda, but again, like any content, it is quality not quantity that matters, so ensure any UGC you have is of the highest quality, or noindex it until it is.

Always be wary of removing any content for a “Panda fix” without thoroughly investigating what is really going on as far as Google is concerned. You don’t want to remove pages you think are low quality when Google is actually sending traffic to those pages. Or better yet, continue adding great content so that the number of lower quality pages you think you might have is diluted.
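One way to act on this is to check every removal candidate against your own organic traffic data first. The sketch below assumes you already have a mapping of URL to organic clicks (for example, from an analytics or search report export); the function name, data shape and threshold are hypothetical.

```python
from typing import Dict, Iterable, List, Tuple


def split_removal_candidates(candidates: Iterable[str],
                             organic_clicks: Dict[str, int],
                             min_clicks: int = 1) -> Tuple[List[str], List[str]]:
    """Split candidate URLs into (keep_and_improve, review_further).

    Pages that still receive organic clicks are kept for improvement rather
    than removal. min_clicks is a hypothetical threshold for this example.
    """
    keep, review = [], []
    for url in candidates:
        if organic_clicks.get(url, 0) >= min_clicks:
            keep.append(url)
        else:
            review.append(url)
    return keep, review
```

Pages in the first list are ones Google is already sending visitors to, so they are better improved than removed; pages in the second list still deserve a manual look before anything is deleted or noindexed.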

Go easy on any ads or affiliate links. It is always better to slowly add them in than to keep creating new pieces of content loaded with ads or affiliate links. Chances are good the world doesn’t need a “top 10 gifts for toddler boys” with Amazon links to each product. And if you insist on it, at least make it so unique that you won’t find another top ten list on the web like it.

Don’t forget Panda is about content, not about technical issues. While some technical issues are in Google’s regular core ranking algorithm, simply fixing these technical issues to cure Panda won’t work. But keep creating great, unique, quality content and Panda shouldn’t be a problem.

Added: Some people didn’t get the significance of Panda now being part of the core ranking algo, so I have talked about it in more detail here.

Also, no, Panda is not real time now.

Trey Collier says

I love this post……but I think you missed one point,,,,,Google is favoring Big Brands in their algo

winter says

Hi, how do affiliate links affect a site where the site owner is the owner of the affiliate product? For example sites like Zazzle where people can create their own products and then make them for sale on Zazzle. Then they join the Zazzle affiliate program and link to their own products from their own website.

I guess my real question is, does Google understand that the site owner is just using Zazzle as a manufacturer and payment gateway and that the site owner is not just an affiliate?

Jennifer Slegg says

From a link perspective, you’d want to make sure those links don’t pass PageRank. Otherwise they could be viewed as manipulating PageRank.

Dawn Anderson says

I come back to this post often Jen. So incredibly useful, particularly the points regarding improvement rather than removal of thin content.

Jennifer Slegg says

Thanks Dawn

Neil Hannam says

Fantastic post Jennifer and im really pleased to see some of the rumoured Panda aspects cleared up. Made it compulsory reading for our SEO and content team before the end of the day.

Out of interest do you think the structure of the content is factored in by Panda? By that I mean use of heading structures (H1, H2 etc), paragraph structure, inclusion of images and breaking content into smaller sub sections. If so how do you think they gauge and measure this?

Jennifer Slegg says

Not specifically by Panda, but we do know that Google does take things like H tags into account just in the regular algo.

William Harvey says

I can’t beleive this post is nearly 12 months old and I haven’t come across it before.

I agree with every point you make and have to congratulate you for this well thought through and thorough post. There’s so much Panda misinformation out there and I’m pleased this post sets the record straight.

If it’s OK with you I’ll be directing people with content/panda issues over to this post.

Jennifer Slegg says

Thanks, and definitely okay