When seeking insights into your mobile traffic, it’s easy to settle for the good old option of segmenting by device in the Google AdWords or BingAds interface. Sure, that can be very useful as a quick fix, but what about a more in-depth review of the mobile impact you’re seeing in your accounts?

Google Analytics offers segmentation and aggregation in a way that provides invaluable insights when discussing performance and optimization opportunities. There are three mobile-centric reports that offer new layers of visibility into account performance, incorporating multiple conversion types, campaign performance, and engagement events.

Although some PPC accounts have a single conversion type and a single form of interaction (the online lead or purchase), many accounts incorporate other options. Maybe you have a separate conversion type, such as an email sign-up or live chat. Maybe you use call tracking to better understand where your phone calls come from, so you track more than just how mobile affects your number-one conversion action. Whatever the action, you have more than one type of valuable engagement.

So how do you effectively pull the data for these engagement types and still gather actionable insights?

First, you need to know which reports to pull from Analytics: a Conversion Types report, a Campaign and Device report, and an Events report.

For you, the practitioner, this means parsing your data carefully to make sure you read your results correctly. The main goal is to get a clearer look at how your mobile traffic actually performs.

Report 1 – Conversion Types Report

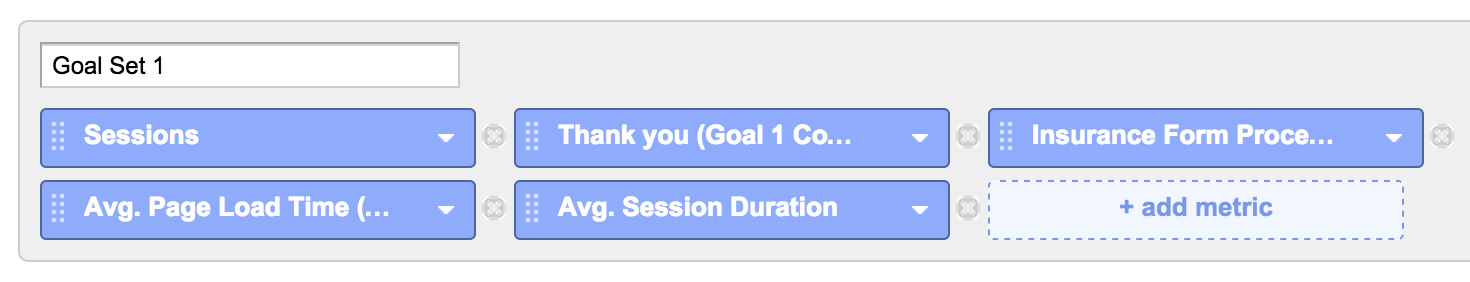

To begin, navigate to the Conversions tab in Analytics and select the Goal of your choice. I’ve set up my Goal 1 as seen below:

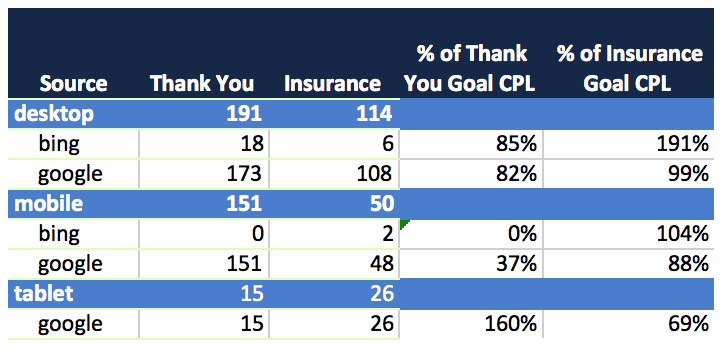

As you might notice, I have two separate but equally valid conversion types listed here: the generic Thank You page and the Insurance Form Processing page, which indicates that a visitor completed the slightly more involved conversion form.

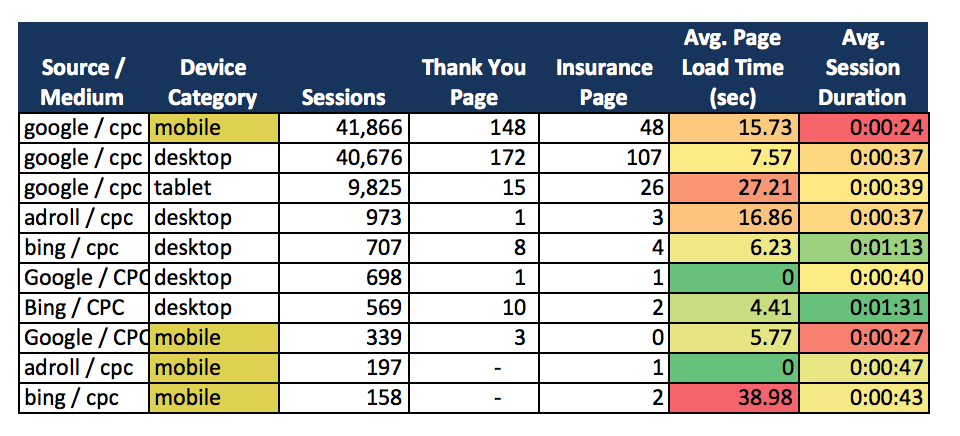

The report is segmented first by Paid Traffic, then by source, and finally by device category.

Using conditional formatting, we get a clear view of comparative load times and session durations by device, which lets us visually identify which experiences correlate with specific sources and devices.

Questions to ask yourself

What is the data telling me? Do my mobile visitors who convert spend less time on my site? Does load time appear to affect conversion rates?

Next Step for Report 1

The next logical step is to compare the investment made with the outcome generated for each type of conversion. As I mentioned, these conversion types originate from different user paths, and an Insurance Form completion is the more qualified lead. For this reason, I want to compare not only cost per conversion but also the accompanying on-site performance by device.

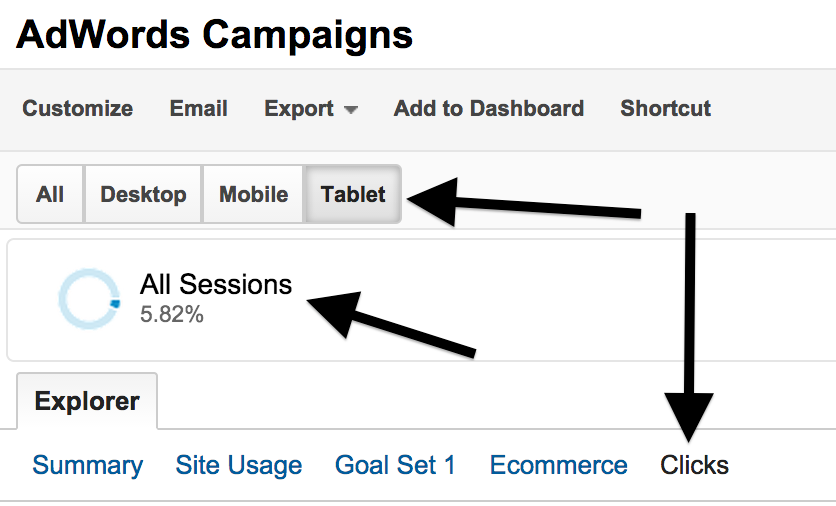

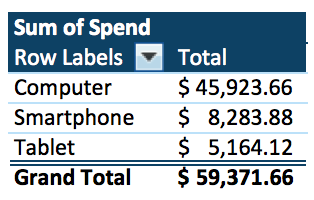

From here, we segment the data into device-by-device performance, such as the Tablet data selected above. You can also simply choose “All” and then build a quick pivot table of your costs by device and by source.
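If you export the “All” view, the pivot is just a grouped sum of cost by device and source. Here is a minimal Python sketch using only the standard library; the row layout and dollar figures are hypothetical, not taken from the account in this article.

```python
from collections import defaultdict

# Hypothetical export rows: (source, device category, cost in dollars).
# Real Bing figures would come from a separate Bing Ads device report.
rows = [
    ("google", "desktop", 1200.00),
    ("google", "mobile", 640.00),
    ("google", "tablet", 180.00),
    ("bing", "desktop", 300.00),
    ("bing", "mobile", 95.00),
]


def pivot_cost(rows):
    """Sum cost into a {device: {source: total}} table, like a spreadsheet pivot."""
    table = defaultdict(lambda: defaultdict(float))
    for source, device, cost in rows:
        table[device][source] += cost
    # Convert the nested defaultdicts to plain dicts for printing and comparison.
    return {device: dict(by_source) for device, by_source in table.items()}


def device_totals(table):
    """Total cost per device across all sources (the pivot's row totals)."""
    return {device: sum(by_source.values()) for device, by_source in table.items()}


if __name__ == "__main__":
    table = pivot_cost(rows)
    for device in sorted(table):
        print(device, table[device])
    print(device_totals(table))
```

A spreadsheet pivot does the same thing; the code version is handy when you repeat the export every week.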

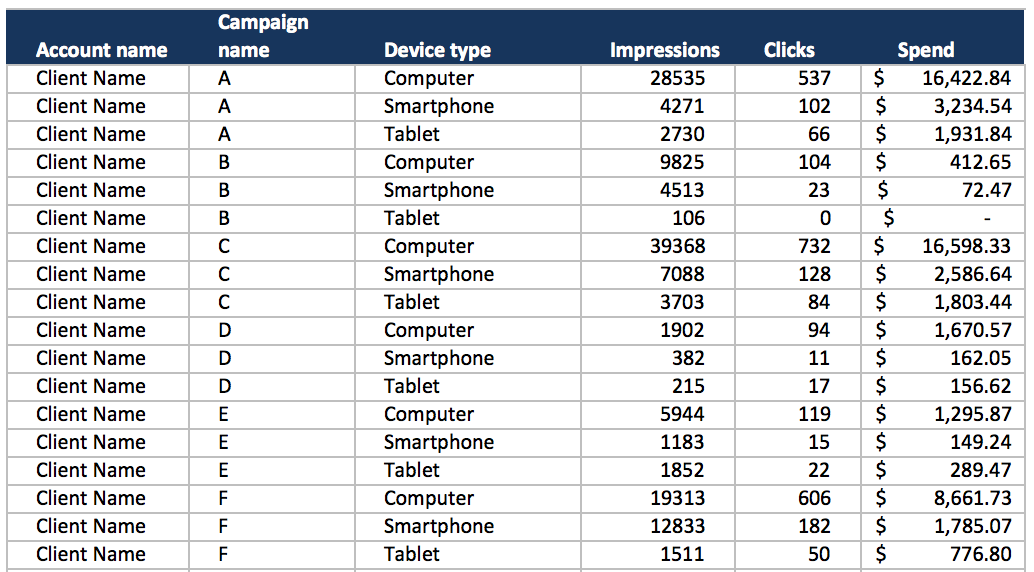

For those using Bing, I’m sorry to tell you that you’ll still need to log in to the BingAds interface and pull your own device-segmented report. This report should contain the performance column “Device type,” which will get you the following:

which we will morph into the following pivot table:

At the end of the day, overlaying these data sets gives you a clear picture of what each device type contributes to your actual back-end conversion performance.

If one conversion type is preferable to another, say Program signup over Email signup, this breakout lets you compare not only the cost per lead (CPL) for each device but also the corresponding engagement metrics.
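The CPL comparison itself is simple arithmetic: spend divided by conversions, checked against a target. The sketch below is one way to do it, under a stated assumption: because spend can’t be split by goal, each goal’s CPL uses the device’s full spend. All spend figures, conversion counts and CPL targets are hypothetical.

```python
# Hypothetical inputs; none of these figures come from the account in the article.
spend_by_device = {"desktop": 2020.00, "mobile": 395.00}
conversions = {
    ("desktop", "Insurance Form"): 40,
    ("desktop", "Contact Form"): 85,
    ("mobile", "Insurance Form"): 5,
    ("mobile", "Contact Form"): 20,
}
cpl_targets = {"Insurance Form": 60.00, "Contact Form": 30.00}


def cpl_report(spend_by_device, conversions, cpl_targets):
    """Return {(device, goal): (cpl, meets_target)}.

    Assumption: spend can't be split by goal, so each goal's CPL is the
    device's full spend divided by that goal's conversions. A goal with
    zero conversions gets (None, False).
    """
    report = {}
    for (device, goal), count in conversions.items():
        if count == 0:
            report[(device, goal)] = (None, False)
            continue
        cpl = spend_by_device[device] / count
        report[(device, goal)] = (round(cpl, 2), cpl <= cpl_targets[goal])
    return report


if __name__ == "__main__":
    report = cpl_report(spend_by_device, conversions, cpl_targets)
    for (device, goal), (cpl, ok) in sorted(report.items()):
        status = "on target" if ok else "over target"
        print(f"{device:<8} {goal:<15} CPL={cpl} ({status})")
```

Keying spend by source instead of device works the same way if you want the per-source comparison.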

In our example, each goal’s conversions are tied to the spend for each source and then compared to that goal’s individual CPL target:

Questions to ask yourself: When I look at where I’m investing my budgets, am I properly acknowledging the value of mobile devices?

Report 2 – Campaign and Device Report

A similar approach with even greater takeaways applies the same breakdown by campaign, not just by device. The value of this analysis is that you can identify how users on each device interact with specific networks and content.

This report is generated in the same fashion as the preceding report, but with the main segments as follows:

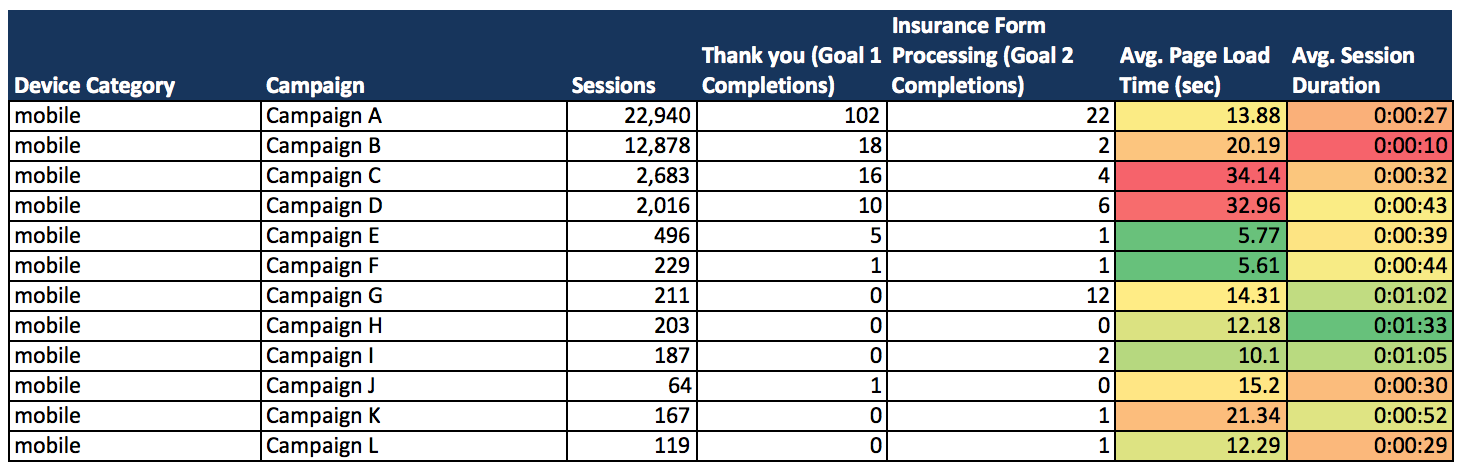

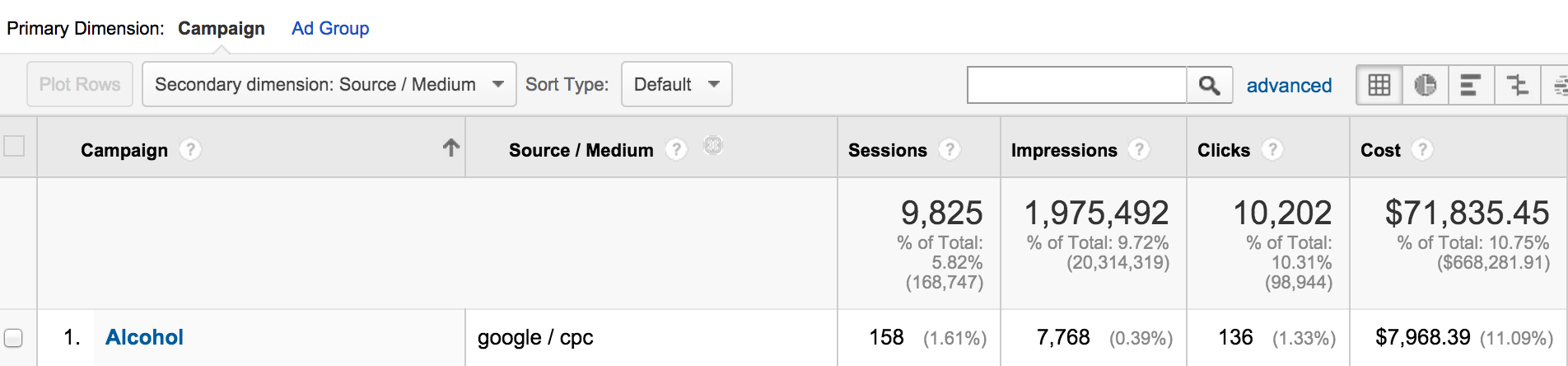

From these settings, we generate a set of data as listed below:

Upon examining this data, we can see the campaigns for which mobile is contributing most strongly.

For example, Campaign G brought in 12 leads (a 5.69% session-to-conversion rate), all through the more-qualified Insurance Form completion. Campaign A did the opposite, generating almost 5x as many Contact Forms (a 0.44% session-to-conversion rate) as Insurance Forms.
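A session-to-conversion rate is conversions divided by sessions. As a rough worked check, and assuming the 5.69% figure is rounded to two decimals, Campaign G’s 12 leads imply about 211 mobile sessions; that session count is an inference, not a number reported above. The helper names below are illustrative.

```python
def conversion_rate(conversions, sessions):
    """Session-to-conversion rate, as a percentage."""
    if sessions <= 0:
        raise ValueError("sessions must be positive")
    return 100.0 * conversions / sessions


def implied_sessions(conversions, rate_pct):
    """Session count implied by a conversion count and a (rounded) percentage rate."""
    if rate_pct <= 0:
        raise ValueError("rate_pct must be positive")
    return round(conversions / (rate_pct / 100.0))


if __name__ == "__main__":
    sessions = implied_sessions(12, 5.69)  # Campaign G: 12 leads at 5.69%
    print(sessions, round(conversion_rate(12, sessions), 2))
```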

My immediate observation is that the content that brings mobile users to Campaigns A, B, C, D, and G is in some way helping to qualify them as more likely to convert. Campaign B, however, has a lower conversion rate as well as a noticeably shorter average session duration. Is there a reason these two metrics coincide?

My first thought is that the site experience is poor. If clicks from Campaign B land on a content-heavy page, or perhaps one with poor image quality, that could easily prompt the visitor to leave the page, or even the site.

In the opposite vein, Campaign H has an average session duration of 0:01:33 but no conversions. Do we blame low session volume? Or is it possible that this campaign sends users to a page with a video that doesn’t effectively convince viewers to convert?

Next Step for Report 2

The follow-up to this report involves examining the role each campaign plays in converting visitors. In reality, Campaign H leads to a page with an index of topics that may interest a wide variety of users. The low session volume (in this case, only about 200 sessions) is because we don’t send many visitors to this page. It’s a very specific type of content, and from it we see consistent re-engagement through additional paid search channels or direct visits.

Because the page itself is so text-heavy, it is no surprise that the average session duration exceeds a minute. As I watch the relationship between mobile visitors from this campaign and direct or branded visits fluctuate, I continually adjust. Whether I shift my mobile bids or test the landing page experience on a broader level, I keep a finger on the pulse of this type of engagement.

Report 3 – Events Report

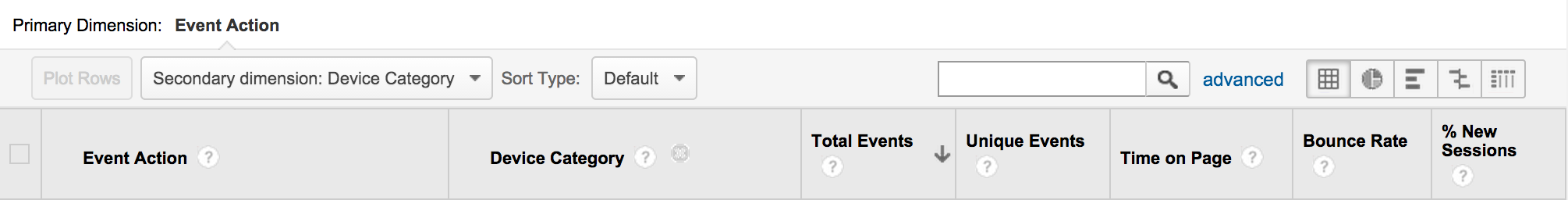

Events – what works best on mobile for multiple sources

Similar to the campaign list, once performance has been broken out by device and by event action, there’s quite a lot to look at.

For those of you not yet using events to track your various forms of engagement, let me demonstrate one of the great insights we get by doing so:

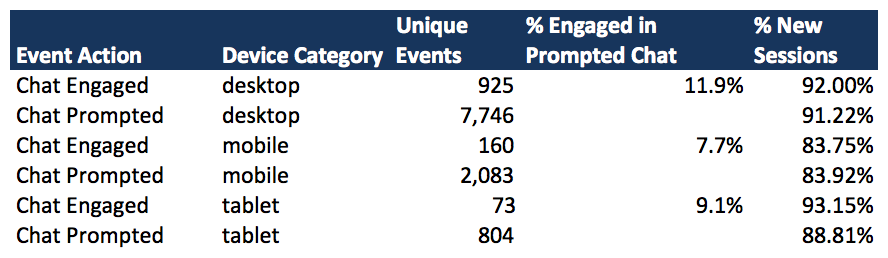

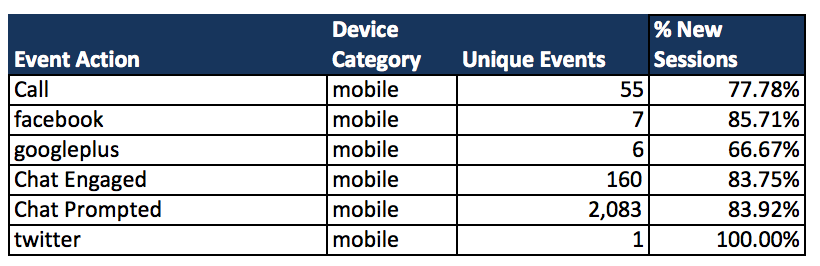

In the table above, we have broken out each type of engagement event, paying particular attention to the number of prompts shown for our Live Chat support. Comparing the number of unique prompts with the number of times visitors actually began a chat, it is clear that mobile has a lower engagement rate.
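The chat engagement rate here is unique chats started divided by unique chat prompts, computed per device. A minimal sketch; the event counts and key names (`prompts`, `chats_started`) are hypothetical stand-ins for whatever your Live Chat events are called.

```python
# Hypothetical unique-event counts by device; substitute your own export.
chat_events = {
    "desktop": {"prompts": 800, "chats_started": 64},
    "mobile": {"prompts": 500, "chats_started": 15},
    "tablet": {"prompts": 120, "chats_started": 6},
}


def chat_engagement(chat_events):
    """Share of unique chat prompts that led to a started chat, per device."""
    rates = {}
    for device, counts in chat_events.items():
        prompts = counts["prompts"]
        # Avoid dividing by zero for a device that never saw a prompt.
        rates[device] = counts["chats_started"] / prompts if prompts else 0.0
    return rates


if __name__ == "__main__":
    rates = chat_engagement(chat_events)
    for device, rate in sorted(rates.items(), key=lambda item: item[1]):
        print(f"{device:<8} {rate:.1%}")
```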

While this may initially suggest that we’re missing something that would compel mobile visitors to begin a chat conversation, let’s first delve into the entire event action list. To save ourselves time (and a wee bit of a headache), let’s remove the non-mobile traffic.

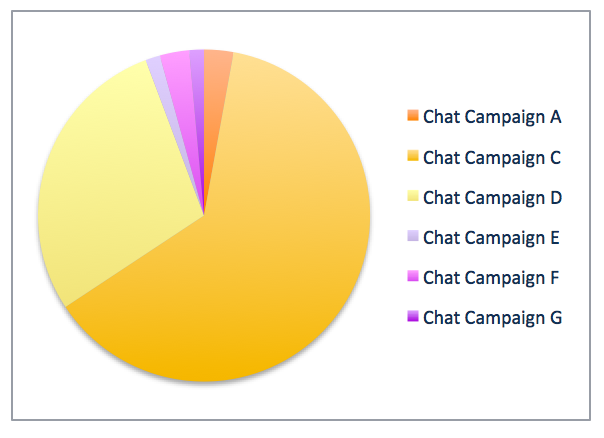

Just as with the previous data sets, the next step is to identify which event makes up the greatest portion of your traffic. Because I can’t manually choose which event my spend goes to, I have to work to cultivate the traffic that carries out my preferred engagement.
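Removing non-mobile rows and ranking event actions by their share of mobile events can be sketched as below. The event actions and counts are hypothetical; in practice you may want to exclude automatically served events such as chat prompts when ranking visitor-initiated actions.

```python
# Hypothetical rows: (device category, event action, total events).
events = [
    ("mobile", "Call", 120),
    ("mobile", "Chat Prompt", 500),
    ("mobile", "Chat Started", 15),
    ("mobile", "Email Signup", 65),
    ("desktop", "Call", 90),
    ("desktop", "Chat Prompt", 800),
]


def mobile_event_share(events):
    """Drop non-mobile rows, then rank event actions by their share of mobile events."""
    totals = {}
    for device, action, count in events:
        if device != "mobile":
            continue  # remove non-mobile traffic first
        totals[action] = totals.get(action, 0) + count
    grand_total = sum(totals.values())
    if grand_total == 0:
        return []
    shares = [(action, count / grand_total) for action, count in totals.items()]
    return sorted(shares, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for action, share in mobile_event_share(events):
        print(f"{action:<13} {share:.1%}")
```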

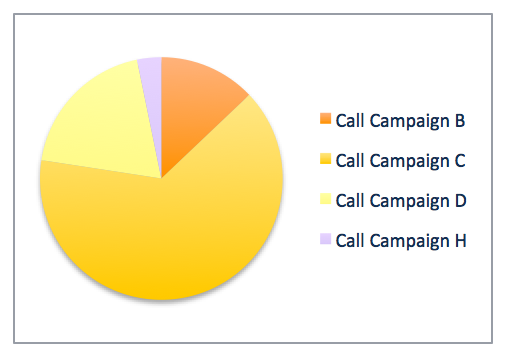

In this case, calls are the highest priority, followed by chats. The number of prompted chats shows me how frequently a visitor is greeted by a chat box and how often they opt to engage. If I pull this report with campaigns included, I can quickly identify which campaigns drive the highest engagement for each event.
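With campaigns included, finding the top campaign for each event is a grouped maximum. This sketch assumes mobile-only rows of (campaign, event action, total events); the campaign names and counts are hypothetical placeholders, not the campaigns discussed in this article.

```python
from collections import defaultdict

# Hypothetical mobile-only rows: (campaign, event action, total events).
rows = [
    ("Campaign X", "Call", 8),
    ("Campaign Y", "Call", 31),
    ("Campaign Z", "Call", 27),
    ("Campaign X", "Chat Started", 6),
    ("Campaign Y", "Chat Started", 5),
    ("Campaign Z", "Chat Started", 4),
]


def top_campaign_per_event(rows):
    """For each event action, return (campaign, total) with the most events.

    Rows for the same campaign and event are summed first; ties keep the
    campaign seen first.
    """
    totals = defaultdict(int)
    for campaign, action, count in rows:
        totals[(campaign, action)] += count
    best = {}
    for (campaign, action), count in totals.items():
        if action not in best or count > best[action][1]:
            best[action] = (campaign, count)
    return best


if __name__ == "__main__":
    for action, (campaign, count) in sorted(top_campaign_per_event(rows).items()):
        print(f"{action:<13} {campaign} ({count})")
```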

From these tables, I can clearly see where I’m getting my highest mobile contribution for chats and for calls. While Campaigns C and D may not have the most online leads, they are certainly strong contributors to my call volume. Likewise, these campaigns hold their own for online chats, although a wider variety of campaigns generate chats.

As I decide how to bid on mobile and allocate my account’s budget, this kind of segmentation gives visibility into the far-reaching effects of my PPC campaigns.

Conclusion

While the reports in this article are specific to the daily PPC life I lead, each practitioner can apply the approach at their own level. You may not have a live chat function, but you may have a video for your visitors to learn more about you. If viewing the video is an important metric for you, you’ve already got a good start. When you break down your engagement by device, a whole world of insights and understanding reveals itself.

Identifying all the hidden gems in Google Analytics is like picking up shells on the beach. There are so many that you could spend days sifting through them and constantly finding new ones. The goal here is to find the shells, or reports in this case, that matter most to you.

[…] Revealing Mobile’s True Impact With Google Analytics, The SEM Post […]