Even seasoned SEOs sometimes stop and wonder, “Did I do that robots.txt right?”, especially when dealing with more complicated robots.txt files that disallow certain directories or certain bots. There are a few robots.txt checkers out there, but their responses can be confusing for new webmasters. Fortunately, Google has just released a new tool that makes all of this much easier.

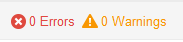

Google has released a brand new Robots.txt Tester right in Google Webmaster Tools. The tester checks the syntax of your robots.txt file and shows any errors and warnings it finds.

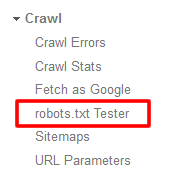
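
For readers who want a rough feel for what a syntax check like this involves, here is a minimal Python sketch. It is not Google's tester and does not reproduce its rules; the `lint_robots` function, the list of known directives, and the sample file are all assumptions made for illustration.

```python
# Hypothetical, simplified linter: NOT Google's tester, just an illustration.
# The set of directives below is an assumption about what is commonly used.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots(text):
    """Return a list of (line_number, message) pairs for suspicious lines."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # ignore comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing ':' separator"))
            continue
        field, _value = (part.strip() for part in line.split(":", 1))
        name = field.lower()
        if name not in KNOWN_DIRECTIVES:
            problems.append((number, f"unknown directive '{field}'"))
        elif name == "user-agent":
            seen_user_agent = True
        elif name in {"allow", "disallow"} and not seen_user_agent:
            problems.append((number, f"'{field}' appears before any User-agent line"))
    return problems


# Sample file with two deliberate mistakes (a typo and a missing colon).
sample = """User-agent: *
Disalow: /private/
Disallow /tmp/
"""

for number, message in lint_robots(sample):
    print(f"line {number}: {message}")
```

A check like this only catches obvious typos. The tester in Webmaster Tools remains the authority on how Google itself reads the file.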

The new checker can be found in the Crawl section of Google Webmaster Tools.

It will also allow you to edit the file on the fly so you can check it as you work on it. There is also a link that allows you to look at the current robots.txt file that is live for that site.

And best of all, they have added a new quick form at the bottom that lets you easily disallow the most common Googlebots from your entire site, or at the directory or page level. You can disallow Googlebot, Googlebot-News, Googlebot-Image, Googlebot-Video, Googlebot-Mobile, Mediapartners-Google and AdsBot-Google. However, you cannot use the form to add rules for non-Google bots directly.
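
To illustrate what a per-bot, per-directory disallow looks like in practice, here is a small sketch using Python's standard `urllib.robotparser` module. The rules, paths, and the `is_allowed` helper are made up for this example, and Python's parser may not match user agents exactly the way Google's crawlers do, so treat it as a local sanity check rather than a substitute for the tester.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block Googlebot-Image from /photos/,
# and block every other bot from /private/.
rules = """\
User-agent: Googlebot-Image
Disallow: /photos/

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(rules.splitlines())  # parse the text directly, no network request


def is_allowed(agent, path):
    """Return True if the given user agent may fetch the given path."""
    return parser.can_fetch(agent, path)


for agent, path in [
    ("Googlebot-Image", "/photos/cat.jpg"),
    ("Googlebot", "/photos/cat.jpg"),
    ("Googlebot", "/private/report.html"),
]:
    print(agent, path, is_allowed(agent, path))
```

Here the image crawler is kept out of `/photos/` while other bots can still reach it, and the catch-all group keeps those other bots out of `/private/`.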

This is a great addition to Webmaster Tools, especially for those webmasters who know just enough about robots.txt to get themselves (and the index-ability of their sites!) in trouble.

Jennifer Slegg
