Google Search Console has begun sending out a brand new warning to webmasters who are blocking CSS and JavaScript on their websites.

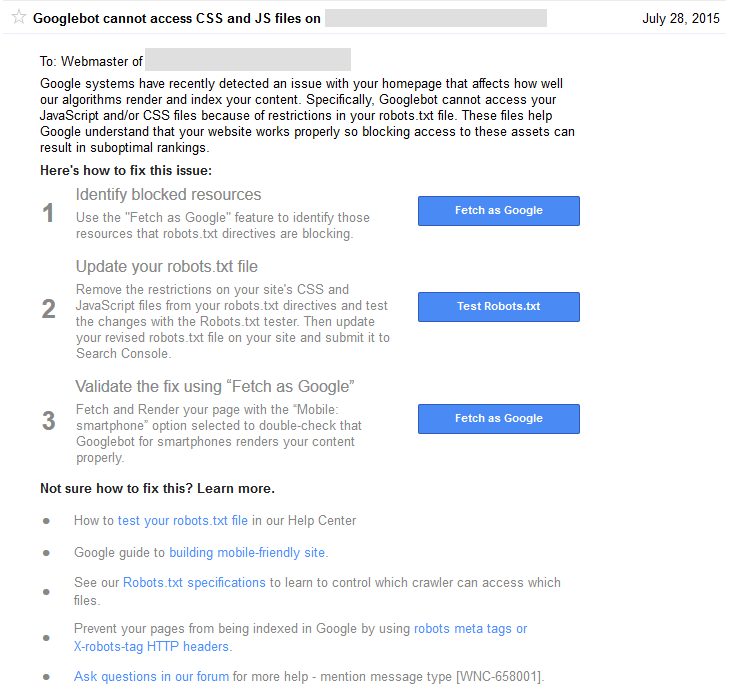

The new warning, also referred to as [WNC-658001], is sent both by email and as an alert in Google Search Console for affected websites.

Googlebot cannot access CSS and JS files on _____

Google systems have recently detected an issue with your homepage that affects how well our algorithms render and index your content. Specifically, Googlebot cannot access your JavaScript and/or CSS files because of restrictions in your robots.txt file. These files help Google understand that your website works properly so blocking access to these assets can result in suboptimal rankings.

Here is a copy of the notice in Google Search Console.

The warning also states emphatically that blocking JavaScript and/or CSS “can result in suboptimal rankings.” Google has been making it known that these files must be unblocked as part of the mobile-friendly algorithm, but it also changed its technical guidelines last year to advise that blocking them can hurt all rankings.

If you need to know which resources are blocked, here is how to find them.

The blocked resources also show up in the “Blocked Resources” section under “Google Index” in Google Search Console. However, Michael Gray tweeted that he was getting the notice for resources that were not blocked.

The message also outlines details of how to fix the issue, likely to help those who have no idea what it means to block those resources and do not realize it is causing an issue.

Google has been increasing the number of messages they send to webmasters, alerting them to site issues that could negatively impact their rankings.

I have asked to see if there is information on how many webmasters received this warning, and will update if more becomes known.

Added: It looks like many are getting the warning because their robots.txt contains “Disallow: /wp-content/plugins/”, a directory that some WordPress setups block. If you use Yoast SEO, you can find the robots.txt in its new location here: SEO / Tools / Files.
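To see why a single “Disallow: /wp-content/plugins/” line is enough to trigger the warning, here is a minimal Python sketch of the rule precedence Google documents for robots.txt: the longest matching path wins, and an Allow beats a Disallow of the same length. It is a simplified illustration, not Google's actual parser. The helper names `pattern_to_regex` and `is_allowed`, the `slider` plugin path, and the two Allow lines in `FIXED` are assumptions made up for the example.

```python
import re

def pattern_to_regex(pattern):
    """Turn a robots.txt path pattern ('*' wildcard, trailing '$' anchor) into a regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal text and let each '*' match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))

def is_allowed(rules, path):
    """Apply (directive, pattern) rules to a URL path using longest-match precedence."""
    best = None  # (pattern length, is_allow) of the most specific matching rule
    for directive, pattern in rules:
        if not pattern:
            continue  # an empty pattern matches nothing
        if pattern_to_regex(pattern).match(path):
            candidate = (len(pattern), directive == "allow")
            # A longer pattern wins; on equal length, allow (True) beats disallow (False).
            if best is None or candidate > best:
                best = candidate
    return True if best is None else best[1]

# The rule many WordPress sites are being warned about.
BLOCKING = [("disallow", "/wp-content/plugins/")]

# One possible fix (an assumption, not taken from Google's message):
# keep the directory blocked but explicitly allow its CSS and JS files.
FIXED = BLOCKING + [
    ("allow", "/wp-content/plugins/*.css"),
    ("allow", "/wp-content/plugins/*.js"),
]

css = "/wp-content/plugins/slider/style.css"  # hypothetical plugin asset
print(is_allowed(BLOCKING, css))  # False: Googlebot cannot fetch the stylesheet
print(is_allowed(FIXED, css))     # True: the longer Allow rule takes precedence
```

Deleting the Disallow line entirely would have the same effect for these files. The explicit Allow lines are only useful if you want the rest of the plugins directory to stay blocked.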

Some are getting alerts for third-party resources that are blocked. However, Google has previously said third-party resources are not an issue, since they are generally outside of the webmaster’s control.
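A robots.txt file only applies to the host it is served from, so a blocked resource on another host cannot be unblocked by editing your own file. If you have a list of blocked URLs, a rough Python sketch like the one below can separate the ones your robots.txt controls from the ones it does not. The function name `split_by_host` and the `www.example.com` site are hypothetical; the ad script URL is simply an example of a third-party resource.

```python
from urllib.parse import urlsplit

def split_by_host(site_host, resource_urls):
    """Split URLs into those on site_host and those served from any other host."""
    own, other = [], []
    for url in resource_urls:
        # hostname is already lowercased by urlsplit; None for malformed URLs.
        host = urlsplit(url).hostname or ""
        if host == site_host.lower():
            own.append(url)
        else:
            other.append(url)
    return own, other

blocked = [
    "http://www.example.com/wp-content/plugins/slider/style.css",
    "http://pagead2.googlesyndication.com/pagead/js/adsbygoogle.js",
]

own, other = split_by_host("www.example.com", blocked)
print(own)    # resources your own robots.txt can unblock
print(other)  # resources governed by someone else's robots.txt
```

The comparison is an exact hostname match on purpose: a subdomain such as a CDN host serves its own robots.txt, so it lands in the second list even if you control it.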

You can also follow us @Jenstar and @TheSEMPost as we post more updates.

Update 12:30pm PST: Some people weren’t aware there is a much easier way to find blocked resources than the “Fetch as Google” option for every page that Google suggests doing. Do double check, as some people who are reporting false positives actually discover they did have some resources blocked that they weren’t aware of. How to Find Blocked CSS & Javascript in Google Search Console.

Jennifer Slegg

John says

So I have tried numerous configurations of robots.txt to no avail. However, I disabled the WordPress plugin Quick Adsense and all renders OK. Could someone who knows how to code look into the issue and maybe post a fix? To be more specific, this is what showed in the list after I tried to render the page before deactivating Quick Adsense: “http ://page ad2.googlesyndication. com/pagead/js/ adsbygoogle.js ”. After deactivating Quick Adsense the page rendered OK.

Edited to break link.

I have contacted BuySellAds, which appears to be the owner of the plugin. Their response is that they no longer support the plugin.

*Please note this robots.txt did not solve the problem.

My current robots.txt:

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

Allow: /wp-content/themes/

Allow: /wp-content/plugins/

Allow: /wp-content/uploads/

Allow: /wp-includes/css/

Allow: /wp-includes/js/

Allow: /wp-includes/images/

User-Agent: Googlebot

Allow: .js

Allow: .css

Allow: /*.js*

Allow: /*.css*

Allow: /wp-admin/

Sitemap: http://mywebsitena me. com/sitemapindex.xml

fc says

When I fetch and render it is showing partial. It looks fine. The same way google sees it is the same way visitors see it.

The reason for this is that I’ve added BuySellAds codes in a widget in my sidebar and footer. When I remove them, it’s perfectly fine. I’m not blocking anything in my robots.txt. Is it normal that Google can’t access external scripts? I don’t want to get penalized, and I don’t want my rank to drop. What do I do? Do I leave the BuySellAds codes?

S.N I’m using the thesis promo theme.

Briantist (post-operative...) says

Fixed this on all my many sites!

I was missing a robots.txt AND had Amazon CloudFront set to US/Europe. I just set it to worldwide, and Google Webmaster Tools says everything is OK.