There has been a great deal in the news recently about the role that fake news could have played in the US elections. Some are calling on Google to take a stand against fake news and remove it completely from the search results.

What many don’t think about, however, are the huge repercussions if Google were to act as judge and jury, deciding what content to keep and what to remove, especially when opinions are split on which side is fact and which is fiction. Do we want one company to decide this for all searchers?

Why is this even an issue?

Earlier this week, The Guardian published an editorial news piece showing that Google was returning results that contradicted the historically recognized and proven facts of a specific historical event, in this case, the Holocaust.

The journalist noted that a search for “Did the Holocaust happen” returned results stating that the Holocaust did not happen, a position historians refer to as Holocaust denial. Yes, Google is returning Holocaust denial results, along with results confirming that the Holocaust did happen. And while many do not agree with the results in this case, there are a lot of problems with using this particular query as the basis for a broad call for Google to scrub fake news and unpopular opinions from its search results.

Are people actually searching for “Did the Holocaust happen?”

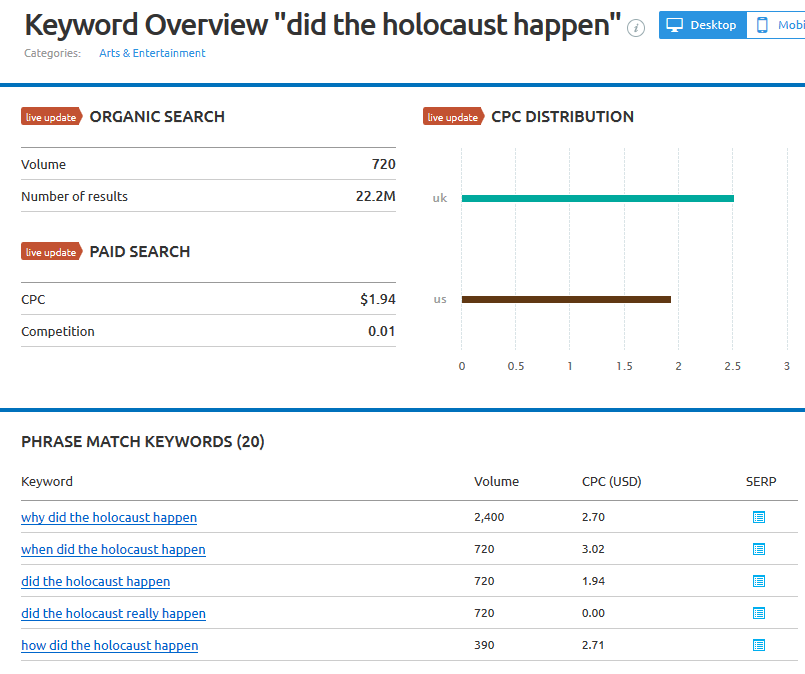

First, the search volume for this search query is incredibly low. It is not something that is regularly searched for on a monthly basis.

Yes, this means only 720 individual searches for this term happen per month. But look at the other queries that contain these same keywords:

- When did the Holocaust happen

- Did the Holocaust really happen

- How did the Holocaust happen

Several of these have nothing to do with people looking for information about whether the Holocaust was a hoax; rather, they are typical searches a student would do when studying the Holocaust and writing a paper about it.

Either way, very few people actually do this search: a mere 720 searches out of the 10+ billion searches done per month.

Delivering the top search results

When you get deeper into the search results, you also start seeing results more specific to the alternate queries that share the same keywords. So while Google is serving 12 million results for that query, many of them are not actually about “did the Holocaust happen?”

But certainly, the top results are skewed towards whether the Holocaust happened or not, because that is the searcher’s actual, very specific query, and Google is attempting to give the searcher what they are looking for.

Sometimes people want to find the fake news version

When I was doing research into fake news, one of the tools I used was Google, to find footprints of one of the fake news sites. I also used Google to find the “original” source of another fake news story that was being shared. If Google had removed those simply for being fake news sites, I would not have been able to find them or use them to debunk the stories.

Anyone who wants to debunk a fake news story or a myth, especially one they were told about rather than shown as a shared article, needs to be able to find the story they want to debunk. Again, if Google had removed it, an author wanting to write about why that story is fake or wrong would have a much harder time without access to the original.

The same applies to scholars writing research papers on this topic, so removal could also hurt academia.

And, as we have seen in the case of Snopes, sometimes those debunking sites can even outrank the original story they are debunking, simply because they pull relevant quotes people might search for and include them in the article.

Why is balance missing?

When you investigate even further, there just isn’t much content that websites have created to debunk the “Did the Holocaust happen” content. Is it Google’s fault that it doesn’t have more results to choose from, so it can show a more balanced results page? If content creators aren’t creating the type of content that could rank for the query, then there’s no way for Google to show the bigger picture to those searchers.

When you look at YouTube specifically, the available content is even more skewed. When you do that search, nearly every video promotes the claim that the Holocaust was a hoax. Again, people aren’t creating videos explaining why the Holocaust wasn’t a hoax, only videos claiming that it was.

Bing & DuckDuckGo

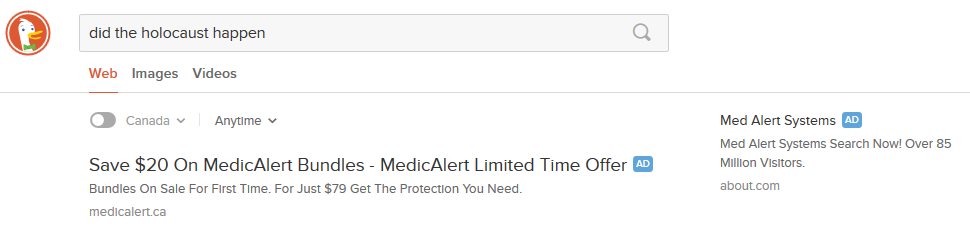

And the results issue isn’t specific to Google. If you look at Bing’s results and DuckDuckGo’s results, they both have the same problem. In fact, DuckDuckGo also tries to monetize those searches by showing two ads for medical alert bracelets above the search results; neither Google nor Bing attempts to monetize those queries.

Content creation solution

The solution for this is very simple. Websites with content related to the Holocaust, content that proves the Holocaust did indeed happen, need to create articles debunking the “Holocaust is a hoax” content that Holocaust deniers are pushing and that sits front and center in “did the Holocaust happen” types of searches.

Wikipedia has an excellent page about Holocaust deniers which ranks well in all three search engines, but at the time The Guardian published its story on December 11th, no other mainstream news site or historical site had published similar content of its own to debunk it. The gap is even more evident in videos.

Content creation effects

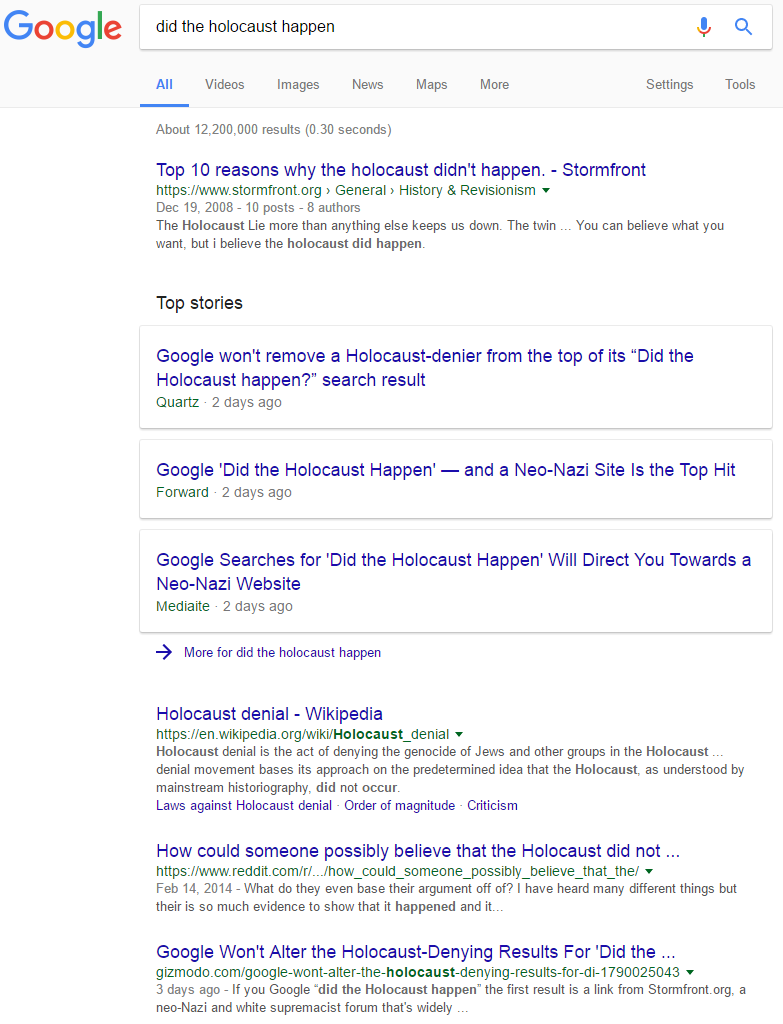

This is already happening. As more news sites are writing about it, it is adding their voices to the particular search results edging out some of the Holocaust denier type pages. Now there is significant content in the new section in the search results as well as in the organic results.

Here is what those search results look like today:

This reinforces the point that if people create substantially more content supporting one side than the other, Google will naturally surface that content. As long as the majority of the available pages take one side of a debate, those pages will dominate the search results.

More subjective content

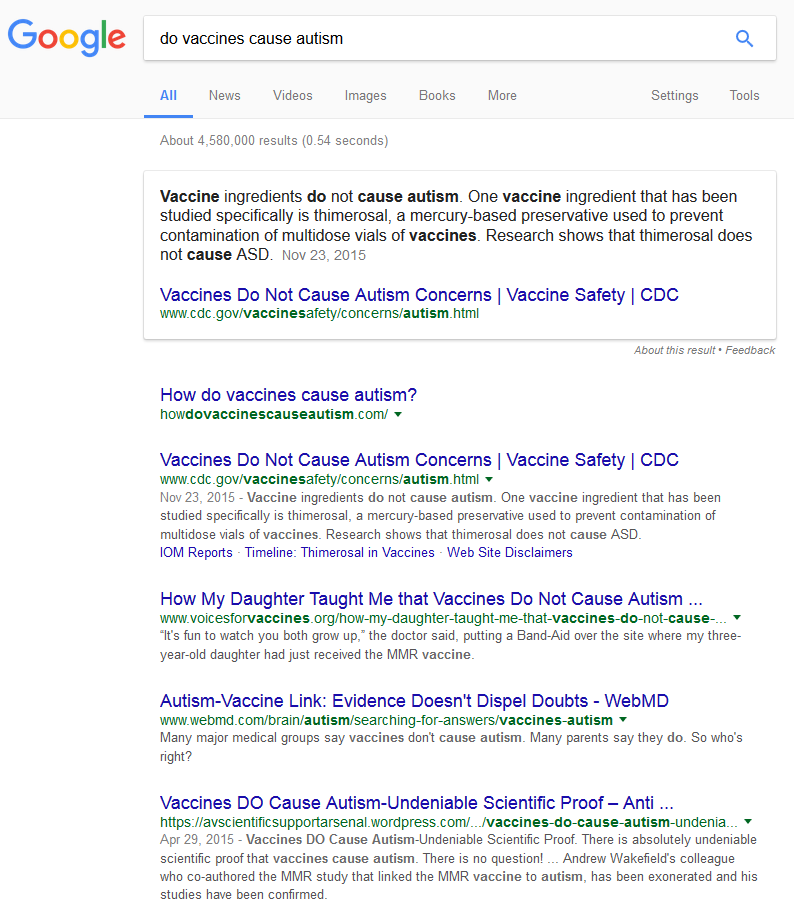

Let’s look at another example, this time the link between autism and vaccines. When you search for these queries, you find a much more balanced representation in the search results. It doesn’t skew heavily to one side or the other, and in fact it includes a featured snippet.

But why does this show a more balanced result than the Holocaust hoax example, including content specifically debunking the link? Because sites, particularly sites with high authority in the search results such as the CDC and WebMD, are actively creating content that either debunks the link between autism and vaccines or gives a balanced view of both sides of the issue.

Do we REALLY want Google to remove?

Still, many are demanding that Google remove results that are not true. But do we really want Google, or anyone else for that matter, deciding what is true and what should be removed for being false? Once a search engine begins removing results, whether entire websites or individual pages, or sanitizing the search results for specific queries, we are no longer seeing algorithmically balanced search results. Instead, we are seeing what one particular company wants us to see.

Lessons from Right to be Forgotten

And we have seen the repercussions of Google removing search results through Right to be Forgotten in the EU. It means that criminals, politicians, or anyone else can whitewash their backgrounds of unfavorable search results, including news stories. As we have seen, this even includes instances where convicted criminals have gotten news stories about their child sex crime convictions removed from the search results. And while some people embrace this, many people are upset that a few individuals can essentially rewrite history through Right to be Forgotten.

Right now, Right to be Forgotten is only applied to searches done from within the EU. So anyone outside of the EU can still see the search results, although the EU is fighting to have these results removed worldwide.

Government interference in search results

Now let’s look at it from a different perspective. What if governments want search results scrubbed of negative political news stories, or want articles about historical events that don’t subscribe to their version of the truth removed?

For instance, what if China demands that Google remove all pages having to do with the Tiananmen Square events of 1989 from all search results worldwide? What if the US government wants to see all results related to climate change removed? What if Donald Trump, once he becomes president, demands that all news stories that portray him in a negative light be removed?

It is a very, very slippery slope once a search engine starts removing search results for any reason, based on the beliefs of anyone, whether that is individuals, governments or companies.

One person’s truth…

Also, like it or not, some people truly believe that some of these hoaxes and myths are reality. This also extends across borders, where one country sees history one way while another sees it in a completely different light. If Google or any search engine removed these groups’ pages for these reasons, there would be a huge outcry, which would likely bring even more media attention to their views.

The ability to find results from both sides of any issue is what helps people reach an informed conclusion, whether the issue is the link between autism and vaccines or the claim that the Holocaust was a hoax. Should Google really be deciding which view is the right one? Or bending to government opinion on which view that is?

Getting it wrong

Then there’s always the possibility of Google getting it wrong.

What if Google removed pages from the search results for being fake news, got it wrong, and removed something legitimate that should be in the search results? There would also be a huge outcry that Google was trying to skew the search results one way or the other.

What is best for the searcher?

And what about the searcher’s perspective? Would searchers feel comfortable using a search engine they know is deciding what is true and what is not? Or one that bows to governments deciding what is true and what is not? While some might say they would love to see Google remove things like Holocaust denial pages, where does that stop? And how could Google algorithmically decide which topics would be subject to this whitewashing, and which opinion should be judged as the truth?

Essentially this would be Google censoring part of the web. This happens already in China, with the Great Firewall of China, where those searching from within China can only find content that is government approved. And required censorship was one of the reasons Google left China in 2010, although before it did so, it lifted the content restrictions by redirecting searchers to the Hong Kong version of Google, which was then made inaccessible to most users in China.

What about Google News?

Google News is a more interesting case, because Google has stricter acceptance criteria for sites included in Google News results. It could remove sites that repeatedly post fake news stories. But most of the sites behind the popular fake election stories that went viral on Facebook were not included in Google News anyway.

When looking at Google News specifically, the only news-related content about the Holocaust consists of news stories about this specific issue. For autism and vaccines, the stories that confirm a link all seem to be labeled “(blog)”, which makes readers more wary of the content, or are political news stories about Trump and his anti-vaccine views. There is even a news entry from Snopes.com.

When does Google remove content or sites?

Right now, Google only removes pages from the organic (regular) search results under very specific circumstances:

- The site was spamming the search results. Sites that have been manually removed from Google get notifications about it, along with information on what they need to do to get their sites back into the search results.

- Pages in the search results that are removed through a valid DMCA request due to copyright infringement.

- Accidental leaks of things like credit card numbers and banking information, which sometimes happen through database leaks or hacks. Google will not remove content simply because it contains dates of birth, addresses or phone numbers; it needs to be specific information that puts the person at risk of identity theft and fraud.

- Child sexual abuse imagery

- Revenge porn, where nude or sexually explicit photos or videos are shared without consent

But should Google be the decision-maker on what is true and what is not? Perhaps we should be doing more to encourage websites and content creators to write more Snopes-like content that disproves these fake news stories or hoaxes, so that it too ranks when people do these types of searches on Google or any other search engine.

Jennifer Slegg


Kurt Henninger says

I agree here... editorializing search results is a very slippery slope. It’s best to leave it up to the algo and keep tweaking and improving it.

Who decides what is correct and incorrect then? SERPs generally use crowdsourcing (in a very general sense) as a means to determine what is the best answer and what isn’t. It’s best to stick to that.

Stoney says

I completely agree. Not only is there an issue with what is “fake” vs. real, but many things that some have determined to be fake are only so because they don’t coincide with the point of view those people want to have. And I’m not talking about regular Joes here; I’m talking about media and political elites. We cannot let them produce a narrative that says, “all dissenting opinions are ‘fake’”.

It would be an interesting study to see how many times something was deemed “fake” and was later proven true. I’m sure it’s a small slice of the pie, but here, small is hugely relevant.

Jennifer Slegg says

True. I suspect that with Facebook’s new fake news changes, especially with the political issues in the US right now, each side will try to get stories that show the other side favorably flagged as fake news.

TJ Kelly says

I see your point, Jen, but I don’t agree 100%. Perhaps removing results entirely is too bold a step, but there is still some responsibility on Google’s part to present accurate information.

This is why legitimate publications have journalistic standards, mostly around fact-checking. It’s irresponsible for major information distributors to disseminate inaccurate info.

Since wholesale scrubbing might be overkill, given the nature of a *search* engine (as opposed to a publication), I’d propose a message akin to the “omitted very similar” notice you get during a site: query (see http://i.imgur.com/K0T76cj.png).

That way, users who want those results can see them, but most folks will skip right over them without being unintentionally swayed by false/incomplete/misleading information.

Jennifer Slegg says

So where do you draw the line on what to remove and what not to? And on what topics? What about pages that discuss both points of view on whatever controversial topic you are looking at? And when government views differ on what is true, it becomes even more complicated.

TJ Kelly says

Thanks for your reply, Jen.

Controversy isn’t the issue. Veracity is. And for that, publications have been practicing fact-checking for decades or longer.

The biggest issue raised in your response is “who decides?” They should crowdsource it. Anyone can flag a result as false/inaccurate, etc. Flagging something would submit the URL for review. Then leave the fact-checking to the professionals.

In my mind, it would work like Reddit. The more flags (downvotes) a result receives, the higher priority it would become for fact checkers.

This is exactly the strategy Facebook is working on: http://arstechnica.com/business/2016/12/facebook-will-outsource-fact-checking-to-fight-fake-news/

When Facebook stories get flagged, they’ll be reviewed by members of the International Fact-Checking Network: http://www.poynter.org/fact-checkers-code-of-principles/ (list of participating organizations at the bottom of that page).

Google could very easily implement the same strategy.

And, in addition to the above, Google is already working on baking this into the algo: https://www.newscientist.com/article/mg22530102.600-google-wants-to-rank-websites-based-on-facts-not-links#.VPOFHGR4rJT

Just because some significant population of US citizens think the phrase “climate change is real” is untrue doesn’t make it untrue. It makes them wrong.

Admittedly, this is an enormous undertaking. But the algo update described in the New Scientist post would go a long way toward lightening the manual load for human fact-checkers.

The whole situation would probably cause Google (and Facebook et al?) to support/contribute financially to journalistic/fact checking orgs. And that would be a major bonus for journalism as a whole.

Jennifer Slegg says

The one flaw with Facebook’s method is that it flags stories as possibly false before it gets to the fact checkers, and there is no doubt that will be exploited to suppress stories depending on what side you are on.

I think if Google can figure out how to algorithmically determine what is fake and what isn’t, it will be a win. But again, how do you tell fact from fake? The climate change / climate denier example is a good one since there is so much on both sides. I think it would be awesome if Google could figure it out. But in that example specifically, especially with some major politicians siding with climate deniers, it could make for a pretty interesting situation to see the reactions from all sides if Google does.

Stoney says

“Just because some significant population of US citizens think the phrase “climate change is real” is untrue doesn’t make it untrue. It makes them wrong.”

This is what makes the whole “fake news” witch hunt so dangerous. We are now being told that climate change denial is “fake” news. It is, however, a valid scientific viewpoint based on multiple factors, not the least of which is actual scientific data.

I don’t need to spend any time offering the opposing viewpoint, but the fact is that anyone who denies that there is a legitimate alternate viewpoint on climate change has bought into a political machine that has had to falsify data, intimidate scientists, and hide the fact that all of their predictions have failed to materialize. Call that what you want, but most people would look at that and smell something worth digging into. However, those who do often have their careers ruined for simply raising questions.

I don’t mean to be an anti-climate change advocate here, only to make the point that those trying to suppress “fake” news are just as likely to be trying to suppress a viewpoint they don’t like. Truth becomes what they tell us, and any investigation into that “truth” becomes “fake news” and is therefore banned.

Should Holocaust deniers get their say? Sure. That’s more of an opportunity to present the truth. Should moon landing deniers be banned from the internet? Why? Why not just use that as an opportunity to prove it? This used to be the role of journalism: to investigate and prove. To look beyond what people are saying and investigate to find the truth behind the rhetoric. To go where the data says, and maybe in the process uncover something that has been hidden behind agendas.

One final point. One article published by a major newspaper (I can’t remember where I read it) arguing that “fake news” stole the election didn’t address anything that was actually fake. The best case it made was that the hacked emails should not have been a factor. While I’m not advocating hacking, all they did was expose a hidden truth. It was legitimate news accessed illegally, but not in any way “fake”.

We can’t let those with the power determine what is “real” and what is not. Google, Facebook, corporations, government, media: they all have political bias. Just because that bias agrees with one’s own view of the world doesn’t make it real.

Google is not a publisher; they are an information aggregator. Their job is to aggregate information, not to have publishing standards other than quality versus non-quality. Discerning between correct and incorrect is the job of each individual, not of corporations.

TJ Kelly says

Agreed that flagging pre-fact check is a problem. Hopefully the flag won’t be shown to users until the fact check is complete.

And regarding the major politicians, I’m sticking to my story: facts are facts, regardless of who agrees or disagrees! 🙂

TJ Kelly says

I can’t reply to Stoney directly because the comment thread is too deep already, but this is meant as reply to both Jen and Stoney…

I think we’re mostly in agreement, but I do want to clarify a few things:

I’m not suggesting that Google “ban” or “delete” these results. Only that they be filtered out, with the option to be added back in [inb4 “filter bubble!” replies].

@Stoney, I see your point about my climate change example, but there’s an important distinction to be made here.

Perfect example.

a) No, that’s not an example of fake news. See below.

b) You call it a valid scientific viewpoint, but 97% of the world’s qualified experts disagree. BUT THAT’S TOTALLY FINE, as far as Google should be concerned.

You and I could fight about whether or not the viewpoint is valid, but that’s irrelevant to this comment thread. My initial comment on Jen’s article is not about controversy or differing opinions. Freedom of speech and all that.

c) An example of fake news is the made-up claims of human trafficking by a D.C. restaurant (as made famous by Pizzagate) or the story that an FBI agent investigating Hillary Clinton’s email server killed himself after murdering his wife. These things did not happen. They were fabricated out of thin air.

Those stories, which have no basis in reality, exist purely to sway public opinion or just to make some money in ad revenue cuz the crazier the story, the more viral it gets!

Agreed. That’s why I’m speaking up on this in the first place. Whose opinion or bias is in play should be irrelevant. The only thing that *should* matter is what’s “real.” [Thus the fact checking discussed above.]

(I might argue that this whole comment thread is contained within the definition of “quality vs. non-quality,” but to address your larger point here…)

I could not disagree more. What’s the difference, anyway?

The Wall Street Journal creates news stories and hires staff writers. Google doesn’t. But Google has infinitely more influence over how and where people get their information.

Both are informing people, and Google infinitely more so.

That influence requires some ethical restraints. See also: Facebook’s unannounced social experiments (https://www.nytimes.com/2014/06/30/technology/facebook-tinkers-with-users-emotions-in-news-feed-experiment-stirring-outcry.html).

These influencers have a responsibility. It may not be a legal one (there’s no law that says Google has to be accurate), but it is an ethical one.

That is, of course, just my opinion. Because internet.

Gail Gardner says

Few realize that what they see on the first pages of search and on social media has been manipulated for years already. The new “fake” news is the latest attempt at conditioning people to ignore alternative viewpoints, because calling everything you don’t want to admit a “conspiracy theory” is not working as well as it used to.

Censorship is definitely going to get worse, but there may be a solution they can’t control. I’ve started seeing new social networks based on blockchain technology that promise privacy and to prevent censorship. We’ll see what happens.

The internet as we know it is always at risk of being taken away from us. If blockchain social networks don’t work, I suppose we’d have to go back to BBSes.

Matt G. says

This is a great discussion, but I’d argue that whether Google is a publisher or an aggregator is a distinction without a difference. Both publishers and aggregators make editorial decisions and their success is reliant on the quality of their content. It’s not censorship, but rather an earnest attempt to carry out an editorial philosophy and mission.

In Google’s case, an algorithm is not a perfect and evenhanded instrument. Nor is it detached from the sorts of choices an editor of a traditional publication might make. Search engines are content curators; some links are ranked higher than others and some are omitted altogether. The flaw that fake news stories have exposed is the inadequacy of online linking and popularity (among other ranking factors) as a reliable proxy for quality.

It’s true that there are topics about which reasonable people may disagree, but it’s also true that there are agreed upon, empirical facts. Sometimes people may write factually incorrect stories for honest reasons, but many times there are nefarious intents behind them, whether they be for profit, attention, or to malign a specific group or individual. This is not an opinion based on values, nor is it a slippery slope. There are journalistic standards that have been designed and tested to root out unreliable content.

Whether a knowingly false story passes muster with an editor’s pen or a search engine’s algorithm, it’s ultimately the reputation of the entity proliferating the story that’s at stake. This may be a difficult problem to address at scale, but Google and other search engines have long relied on teams of editors to remove (or restore) listings that don’t adhere to their standards of quality. An appropriate solution is not to ask other people to try to outwit the algorithm by generating alternative stories; it’s to remove the stories you know are of low quality. To not even attempt to remedy the scourge of junk news is not a salute to free speech. Rather, it’s a reflection of a publication’s lack of integrity.