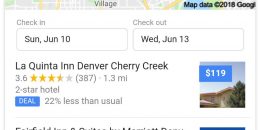

Google is testing a disambiguation box that appears in mobile search results for a local pack. Disambiguation features appear in the search results when Google isn’t entirely sure which meaning of a word someone is searching for. Here is what it looks like: Google is asking whether someone is searching […]

Google & Optimizing for Local “Near Me” Searches in Search Results

Ever since searchers started adding “near me” to more of their Google voice searches, many site owners have been working “near me” into their titles and content. The theory is that pages containing the phrase should rank for searches that include it. John Mueller addressed this and […]

Removing Sitemap Files From Google Search Console

In Google Search Console, you can add and remove sitemaps for your site. Google then reports information about each sitemap file, such as any warnings or errors and the pages indexed from it. While Google can discover and crawl sitemaps on its own, submitting them makes the process faster and easier, and it […]
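Before submitting a sitemap in Search Console, you need the file itself. Below is a minimal sketch of generating one with Python's standard library. It assumes a plain list of page URLs and emits only the required `<loc>` entries under the sitemaps.org namespace. The function name `build_sitemap` and the `example.com` URLs are hypothetical. Optional fields such as `<lastmod>` are left out.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(urls: list[str]) -> str:
    """Return a minimal sitemap XML string with one <url><loc> per URL.

    Hypothetical helper: only <loc> is written; ElementTree escapes
    characters such as '&' in the URLs automatically.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = url
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


if __name__ == "__main__":
    print(build_sitemap(["https://www.example.com/", "https://www.example.com/about"]))
```

You would save the output as a file on your site and submit its URL in Search Console. Because ElementTree handles escaping, it is safer than building the XML by string concatenation.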

Why Google Won’t Give Specific Meta Description Lengths

Ever since Google shortened the descriptions in the search results last week, many SEOs have been freaking out. They had rewritten their meta description tags to 300+ characters, and now Google has dropped back to about 160 characters. The important thing to note is that Google has […]
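Google does not publish an exact limit, so any length check is only a rough heuristic. Here is a minimal sketch of auditing meta descriptions against the approximate 160-character figure mentioned above. It reports whether a description fits and shows a word-boundary preview of where a cut might land. The constant `APPROX_MAX_CHARS` and the function `audit_description` are hypothetical names. Treating length as a character count is itself an assumption about how truncation works.

```python
# Approximate figure from the article; Google does not publish an official
# limit, so this threshold is an assumption, not a rule.
APPROX_MAX_CHARS = 160


def audit_description(text: str, limit: int = APPROX_MAX_CHARS) -> tuple[bool, str]:
    """Return (fits, preview) for a meta description.

    If the whitespace-normalized text exceeds `limit`, the preview is cut
    at the last word boundary and ends with an ellipsis, staying within
    `limit` characters in total.
    """
    cleaned = " ".join(text.split())  # collapse runs of whitespace
    if len(cleaned) <= limit:
        return True, cleaned
    cut = cleaned[: limit - 1]  # leave room for the ellipsis character
    if " " in cut:
        cut = cut[: cut.rfind(" ")]  # avoid ending mid-word
    return False, cut.rstrip() + "…"


if __name__ == "__main__":
    fits, preview = audit_description("A short, focused summary of the page.")
    print(fits, preview)
```

This flags candidates for review rather than rewriting them. Whether a longer description is worth keeping remains a judgment call.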

Google Reduces Description Length in Desktop & Mobile Search Results

Two years after Google first increased the length of descriptions in the search results, and six months after increasing it for most results, Google is reverting to the old, shorter length. For some SEOs, this means a lot of work changing their meta description tags from the longer version to the […]

Google & JavaScript: Googlebot’s Indexing Can Miss Metadata, Canonicals & Status Codes

At Google I/O yesterday, Google detailed some issues with JavaScript when it comes to both crawling and indexing content. One thing they revealed is that Googlebot processes JavaScript-heavy pages in two phases: an initial crawl, and then a second one a few days later that will render the JavaScript […]
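If rendering happens days after the initial crawl, one practical check is whether key metadata is already in the HTML the server sends, before any JavaScript runs. Below is a rough sketch of that check. It assumes that the server-sent HTML is a reasonable proxy for what the first phase sees, which is a simplification. It only looks for a `<title>`, a meta description, and a canonical link. The class `HeadTagScanner` and the function `missing_in_raw_html` are hypothetical.

```python
from html.parser import HTMLParser


class HeadTagScanner(HTMLParser):
    """Record which metadata tags appear in raw (unrendered) HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.found: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # Attribute names arrive lowercased; values may be None.
        a = {k: (v or "") for k, v in attrs}
        if tag == "title":
            self.found.add("title")
        elif tag == "link" and "canonical" in a.get("rel", "").lower().split():
            self.found.add("canonical")
        elif tag == "meta" and a.get("name", "").lower() == "description":
            self.found.add("description")


def missing_in_raw_html(html: str) -> set[str]:
    """Return which of title/description/canonical are absent from `html`."""
    scanner = HeadTagScanner()
    scanner.feed(html)
    scanner.close()
    return {"title", "description", "canonical"} - scanner.found


if __name__ == "__main__":
    shell = '<html><head><title>App</title></head><body><div id="app"></div></body></html>'
    print(missing_in_raw_html(shell))
```

Anything reported as missing is only added by JavaScript, if at all. Under the two-phase model described above, that metadata would not be seen until rendering.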

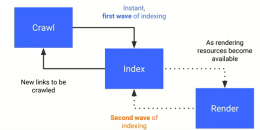

Google Indexes and Ranks JavaScript Pages in Two Waves Days Apart

Tom Greenaway from Google spoke at Google I/O yesterday about how Google handles JavaScript pages. He mentioned something pretty significant: Googlebot handles the indexing and ranking of JavaScript pages very differently from non-JavaScript pages. More specifically, when Googlebot crawls JavaScript sites, it does so in waves. And the result is it will have […]

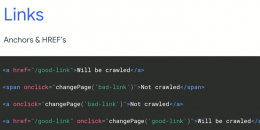

Google: Types of Links That Googlebot Will & Will Not Follow

At Google I/O yesterday, Google clarified the types of links that Googlebot will follow. While “a href” is the standard link type, they also covered various other link types and whether Googlebot would follow them. In particular, they clarified some uses of the JavaScript onclick attribute within links and which ones Googlebot will […]
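To audit a page's links along these lines, you could sort link-like elements into those with a real URL in `href` and those that depend only on JavaScript. Below is a minimal sketch using a deliberately simple, assumed rule. An `<a>` counts as likely crawlable only if its `href` holds a real URL, meaning not empty, not a `#` fragment, and not a `javascript:` pseudo-URL. Everything else, including non-`<a>` elements with `onclick`, is flagged for review. This is not Google's link extractor, and `LinkAuditor` is a hypothetical name.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse


class LinkAuditor(HTMLParser):
    """Heuristically split link-like elements into crawlable vs flagged.

    Assumed rule: an <a> is 'crawlable' if its href is a real URL; an
    onclick alongside such an href is ignored. <a> elements without a
    usable href, and other elements carrying onclick, are 'flagged'.
    """

    def __init__(self) -> None:
        super().__init__()
        self.crawlable: list[str] = []
        self.flagged: list[str] = []

    def handle_starttag(self, tag, attrs):
        a = dict(attrs)
        if tag != "a":
            if "onclick" in a:
                self.flagged.append(self.get_starttag_text() or "")
            return
        href = (a.get("href") or "").strip()
        is_js = urlparse(href).scheme.lower() == "javascript"
        if href and not href.startswith("#") and not is_js:
            self.crawlable.append(href)
        else:
            self.flagged.append(self.get_starttag_text() or "")


if __name__ == "__main__":
    auditor = LinkAuditor()
    auditor.feed('<a href="/page">ok</a><span onclick="go()">menu</span>')
    auditor.close()
    print(auditor.crawlable, auditor.flagged)
```

The flagged list is a starting point for checking whether those navigation paths also exist as plain `href` links somewhere on the site.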

Googlebot Still Uses Older Chrome 41 for Rendering

At yesterday’s Google I/O, John Mueller revealed that Google is still using an older version of Chrome, Chrome 41, for Googlebot’s official rendering. This means that some coding features available in newer versions of Chrome and other browsers won’t be rendered properly by Googlebot. What’s not immediately clear from […]

Google: Keep Same URLs When Replacing Old Content With New

When content becomes outdated, site owners sometimes remove the old page and replace it with a new one, and they don’t always remember to add a redirect when doing so. The question came up on Twitter about whether it is better to replace the content at the same URL or to redirect the old page to the new one. […]