Google has never published a list of the IPs Googlebot crawls from, since those IPs can change at any time. It also runs separate bots for specific products, such as Google AdSense and Google AdWords.

One of the issues is Googlebot's locale-aware crawling. These requests not only come from completely new IPs but also from IPs based in countries outside the US, and that seems to be triggering false positives in bot-blocking scripts. If you are unsure whether a visiting Googlebot is the real one, you can do a reverse DNS lookup on its IP (followed by a forward lookup on the hostname it returns) to confirm.
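A reverse DNS lookup on its own is not quite enough, because whoever controls an IP also controls its PTR record. Google's documented check therefore has two steps: the hostname returned by the reverse lookup must end in googlebot.com or google.com, and a forward lookup of that hostname must resolve back to the original IP. Below is a minimal sketch of that check using only the Python standard library. The function name `is_real_googlebot` and the swappable resolver parameters are illustrative choices of ours, not part of any Google tool or API.

```python
import ipaddress
import socket
from typing import Callable, Iterable

# Hostname suffixes Google documents for genuine Googlebot reverse DNS names.
GOOGLE_SUFFIXES = (".googlebot.com", ".google.com")


def _reverse_lookup(ip: str) -> str:
    # PTR lookup: the primary hostname registered for this IP.
    return socket.gethostbyaddr(ip)[0]


def _forward_lookup(host: str) -> Iterable[str]:
    # A/AAAA lookup: every address the hostname resolves to.
    return [info[4][0] for info in socket.getaddrinfo(host, None)]


def is_real_googlebot(
    ip: str,
    reverse_lookup: Callable[[str], str] = _reverse_lookup,
    forward_lookup: Callable[[str], Iterable[str]] = _forward_lookup,
) -> bool:
    """True only if `ip` reverse-resolves to a Google crawler hostname
    that forward-resolves back to the same IP."""
    try:
        client = ipaddress.ip_address(ip)
    except ValueError:
        return False  # not a valid IP address at all
    try:
        host = reverse_lookup(ip).rstrip(".").lower()
    except OSError:
        return False  # no PTR record: cannot be verified
    if not host.endswith(GOOGLE_SUFFIXES):
        return False  # e.g. a spoofed "Googlebot" user agent
    try:
        resolved = {ipaddress.ip_address(a) for a in forward_lookup(host)}
    except (OSError, ValueError):
        return False  # hostname does not resolve cleanly
    # The forward lookup must include the original IP; otherwise the PTR
    # record may simply have been set by whoever controls that IP.
    return client in resolved
```

Calling `is_real_googlebot("66.249.66.1")` performs two live DNS queries. In a blocking script you would likely run it only for requests whose user agent claims to be Googlebot, and cache the result per IP, so that new crawler IPs are verified automatically instead of being blocked until someone adds them to a whitelist by hand.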

Why do people block bots? For a variety of reasons: to reduce server load, to block attacks, and to prevent fake referrals. Because many bots spoof Googlebot's user agent, site owners generally whitelist a list of known Googlebot IPs. But when Google switches up its IPs and a site owner inadvertently blocks Googlebot, it can take quite some time to rectify the situation, as one site owner described in this thread from WebmasterWorld.

> I have a system that prevents ‘bots from crawling my site. It has a whitelist, to which I add Google IPs. I had always added them manually because new IPs didn’t come up too often, and I wanted to make sure that no one was spoofing Google. About 10 days ago, Google apparently switched to crawling from about a dozen new IPs. I was not paying close attention to my system and those IPs got blocked. They were blocked for about 3 or 4 days.
>
> …
>
> The traffic picked up a little bit, but slowly. Google wasn’t adding the pages back even though they had recrawled them. Some pages came back, but some of my top pages (for example, Connor McDavid) were nowhere to be found in Google – even when I searched with my site’s name (as many users do). I tried asking Google to recrawl multiple times, but after a week they still aren’t adding back pages for which I request a recrawl.

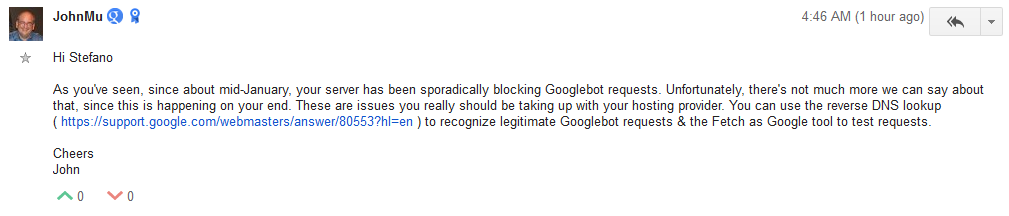

John Mueller also commented today in a Google Webmaster Help forum thread about the same situation, where a site was blocking Googlebot.

Hosting companies can also block Googlebot to save server resources. Many, many years ago, GoDaddy blocked Googlebot from crawling all of the sites it hosted for its clients.

Bottom line: if you use any kind of bot-blocking script, you will want to check Google Webmaster Tools daily (if not more than once a day) for any sign that Googlebot is being blocked.

Jennifer Slegg
